¡ɹǝpun uʍop ɯoɹɟ ʎɐppᴉפ


- Scan it with AV. This might still produce false positives, so understand the difference between viruses and PUPs.

- Go with keygens if at all possible. Run them in a sandbox, like sandboxie-plus.

- Only download cracks from trusted sites, and from trusted scene groups.

- Preferably check the crack against an MD5 or CRC, so you know it's not been tampered with.
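For the checksum step, here is a minimal Python sketch of how a file can be compared against a published MD5 or CRC32. The function names (`file_md5`, `file_crc32`, `matches`) are made up for illustration, and it assumes the published value is a plain hex string. Keep in mind a match only tells you the file is the same one the hash was published for.

```python
import hashlib
import zlib


def file_md5(path, chunk_size=1 << 20):
    """Return the MD5 of a file as lowercase hex, reading it in chunks."""
    digest = hashlib.md5()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def file_crc32(path, chunk_size=1 << 20):
    """Return the CRC32 of a file as 8 lowercase hex digits."""
    crc = 0
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            crc = zlib.crc32(chunk, crc)
    return f"{crc & 0xFFFFFFFF:08x}"


def matches(path, expected_hex):
    """Compare a file to a published hash (32 hex chars = MD5, otherwise CRC32)."""
    expected = expected_hex.strip().lower()
    actual = file_md5(path) if len(expected) == 32 else file_crc32(path)
    return actual == expected
```

Usage would be something like `matches("setup.bin", "5d41402abc4b2a76b9719d911017c592")`, where the file name is just a placeholder.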

In 2017, most content was H264 and 1080p. This typically made a movie about 10GB with just 5.1 sound. The same movie with DTS 7.1 and possibly 5.1 etc. would be 12-16GB. Today that same 16GB movie with H265 would be 6-8GB.

The thing is that movies are now typically 4K with Atmos etc., which would have been 30+GB in 2017. For the same given “quality” and bitrate settings, that movie would now be ~15GB.

What we are seeing now in Usenet/scene releases is that those quality settings are being pushed up, since unlimited monthly data and H265 allow better quality.

So with that in mind, the answer to your question is, yes and no. I can give you an example: Fast X. I can see a UHD 4K HDR10 TrueHD for 61GB, and all the way down to 2.5GB!!

So now you get to have a choice! :D (Oh, and you can also see the traditional H264 1080p still sitting at around 10GB, and the basic 4K version using H265 is only 13GB.)
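The size arithmetic above can be sketched roughly like this. File size is just bitrate times runtime, so halving the bitrate (which is about what the 16GB to 6-8GB figure implies for H265) halves the file. The bitrates and the 0.5 factor here are illustrative assumptions, not measured values, and `size_gb` is a made-up helper name.

```python
def size_gb(video_mbps, audio_mbps, runtime_min):
    """Estimate file size in GB (decimal) from average bitrates and runtime."""
    total_bits = (video_mbps + audio_mbps) * 1_000_000 * runtime_min * 60
    return total_bits / 8 / 1_000_000_000


# Assumed example: a 2-hour movie at 10 Mbps H264 video plus 0.64 Mbps 5.1 audio.
h264 = size_gb(10, 0.64, 120)

# Assumption: H265 needs roughly half the video bitrate for similar quality.
HEVC_FACTOR = 0.5
h265 = size_gb(10 * HEVC_FACTOR, 0.64, 120)

print(f"H264: {h264:.1f} GB, H265: {h265:.1f} GB")
```

Push the bitrate up instead of pocketing the saving and you get the bigger, better-looking releases mentioned above.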

Jellyfin or Kodi (FOSS), or Emby (Better than Plex, not FOSS, but developer is very responsive) or Plex (bottom of the rung, corporate money grabbers).

Run one of those on your media server, and grab the client for your phone, or tv device.

The TV device can be a Chromecast, Roku, whatever. (The Nvidia Shield is expensive, but it does support DTS and stuff, if you need that. Does your TV room have 6+ speakers?)

Personally, I like Emby. It just works.

So your connection (using port 563, for example) is encrypted. That is not the problem.

The problem is unencrypted or open filenames. It's the content that is DMCA’d on usenet, not the end user.

If you post up to usenet a file (being really simplistic, since most people don't post) called “Deadpool3-h264.mkv”, it will be taken down pretty quick on some services.

The problem is only for users like you, who want to get it. The solution to this is threefold: get yourself onto a private scraper (one that only does occasional free signups), be really fast on content grabbing, or use a site that has good de-obfuscating scraping ability. (There are a few out there that are open. PM if you are interested.)

I couldn’t find the example I had in mind, but it was something that was over 120GB, and the unpack was 8+ hrs on what is arguably a reasonable PC.

You forget most people do not have a CPU that has 24 threads, 12 cores. And on top of that, the amount of RAM required can be questioned. If you think your 64GB RAM and 16/32 CPU are “normal” then you are just kidding yourself, and it's probably not your money you are spending to buy it.

Then on top of that, most people have an ISP that can reach far higher speeds than you propose. Mine is 25MB/s as a basic minimum. I’m sorry to hear that you cannot get even that, but that is as common as muck in most of the civilised western world.

Seriously? This is an actual question?

Exhibit 1:

Check for yourself: https://www.av-test.org/en/antivirus/home-windows/

If “Protection” is not the highest score, it's shit. The other two (perf/use) are not so important generally, but I would say a score of 6,6,6 is far superior to anything 5.5,X,Y. Probably also means the company isn’t a rolling rock gathering moss. (“We make AV, and we keep it up to date” as opposed to “we are a megacorp, and profits overrule everything else”).

Do you want a simple answer?

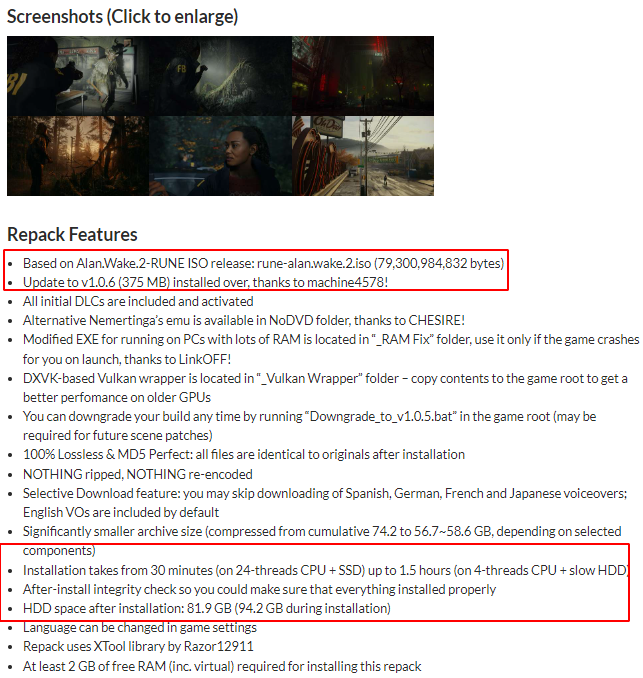

fitgirl repacks attempt to stress your cpu/gpu to the maximum and try to overheat your beloved PC, by making the data for the game astronomically compressed to the point where the decompression time can actually take longer than the time to download the original crack on USENET by as much as 4-8x.

If you have USENET, don’t even bother. If you have unlimited data on your ISP, just torrent the un-“packed” version. You will thank me in the long run. It's not worth it in most cases.

Here is an example of a 75GB repack that makes it 56GB:

Did the time you saved by downloading <20GB less make up for the additional 1.5 hrs to decompress?!

Okay, if the repack is under 20-30GB, it's probably worth a shot. But even then…
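To put numbers on the trade-off above, here is a quick sketch. It uses the 75GB vs 56GB sizes and the 1.5 hr unpack from the example; the 25 MB/s download speed is an assumption you should swap for your own, and the helper names are made up.

```python
def download_seconds(size_gb, speed_mb_per_s):
    """Seconds to download size_gb (decimal GB) at speed_mb_per_s (MB/s)."""
    return size_gb * 1000 / speed_mb_per_s


def repack_net_seconds(original_gb, repack_gb, speed_mb_per_s, unpack_hours):
    """Download time saved minus decompression time. Negative means the repack costs you time."""
    saved = download_seconds(original_gb - repack_gb, speed_mb_per_s)
    return saved - unpack_hours * 3600


def break_even_speed(original_gb, repack_gb, unpack_hours):
    """Download speed (MB/s) below which the repack starts to save time."""
    return (original_gb - repack_gb) * 1000 / (unpack_hours * 3600)


net = repack_net_seconds(75, 56, 25, 1.5)
print(f"Net time at 25 MB/s: {net / 60:.0f} minutes")
print(f"Break-even speed: {break_even_speed(75, 56, 1.5):.2f} MB/s")
```

With those inputs the repack only wins if your connection is slower than a few MB/s.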

- Usenet provider. I suggest if you make the jump, then Newshosting is a great option. Why: 5557 days of retention, up to 100 connections, and unlimited download (on highest plan $15/month).

Also Newshosting offers a FREE TRIAL. From their T&C: “Free Trial ends in 14 days or 30GB used, whichever comes first.” You might have to do a CC upfront, but just cancel it the next day, and run the free 2 weeks.

- Will you find Italian content? YES, there is tons of it. How do I know? Because I host a Usenet indexer/scraper, and I see everything that is scraped on my server. English, German and Italian are among the top 5 languages.

- NZBFind. That is a scraper/indexer. You still need a Usenet provider. The indexers just provide you with the NZB, you need the usenet provider (configured in NZBGet or whatever your download *arrr is) to get all the “parts” (binary posts) and assemble them together.
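To show what "the indexer just gives you the NZB" means: an NZB is a small XML file that lists, per file, the message IDs of the parts to fetch from the provider. Here is a minimal sketch that counts the parts in one. The sample document, poster, and message IDs are invented for illustration, and `summarize_nzb` is a made-up helper name.

```python
import xml.etree.ElementTree as ET

# Namespace used by NZB documents.
NZB_NS = {"nzb": "http://www.newzbin.com/DTD/2003/nzb"}

# Invented sample: one file split into two parts (segments).
SAMPLE_NZB = """<nzb xmlns="http://www.newzbin.com/DTD/2003/nzb">
  <file poster="someone@example.invalid" date="1700000000" subject="example.bin (1/2)">
    <groups><group>alt.binaries.example</group></groups>
    <segments>
      <segment bytes="700000" number="1">part1@example.invalid</segment>
      <segment bytes="300000" number="2">part2@example.invalid</segment>
    </segments>
  </file>
</nzb>"""


def summarize_nzb(xml_text):
    """Return (subject, part_count, total_bytes) for each file listed in an NZB."""
    root = ET.fromstring(xml_text)
    summary = []
    for file_el in root.findall("nzb:file", NZB_NS):
        segments = file_el.findall("nzb:segments/nzb:segment", NZB_NS)
        total = sum(int(seg.get("bytes", 0)) for seg in segments)
        summary.append((file_el.get("subject"), len(segments), total))
    return summary


print(summarize_nzb(SAMPLE_NZB))
```

The downloader does the rest: it asks the provider for each message ID and stitches the parts back together.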

I hope your will and testament are up to date, you are basically screwed.

Parents crying at the hole they put you down in the cemetery. Girls you knew, secretly confessing that they would have ‘done you - together, at the same time’ whilst eroding the earth with their tears. Boys from the same grade, sobbing wishing they were just like you, to get “those other girls back there”. That mate of yours that always was a bit weird, who rocked up with his AK47 and 250 rounds of ammo “just in case”.

FBI staking it all out, and waiting for them to disperse, so they can dig you up, and take your corpse to A51 and dissect you to see how many rings you have to determine your age, and if you have links to piratebay.

Here you go, someone's (slightly horrid) basic layout of how it can work.

You do not have to run a local instance of MusicBrainz (I do, because I can; it removes API limits but it's expensive in storage and data), just point to the public instance. Also you could do Headphones, but I moved away from that years ago and have had a much happier experience with Lidarr.

So Lidarr/NZBGet (or whatever you use) are pretty straightforward.

It gets complex with beets. Not that it is inherently complex, it just has an absolute shit ton of options. You want to start with a yaml config, and just get the feel of how it operates. There are lots of “howto’s” online, but unfortunately “beets” is way too simple a search term. So you need to beef it up with some specifics related to ahem music.

The manual and GitHub do have it well documented. I would suggest starting with a subset of your collection, and just tinkering (move files from /home/a to /home/b, convert to mp3 and fix ID3). It comes together pretty quickly. But the configurability of beets is crazy (in a good way).

Other things like triggering scans from LMS etc, they are documented on their respective sites.

I’ll fess up: it's not immediately for the faint hearted, but it's probably not that hard for most people who actually read documents and learn.

I use Lidarr for most music grabs. spotdl when Lidarr fails to find (which is uncommon since I use usenet). Then I use beets to manage music files https://github.com/beetbox/beets .

I have beets set up to run as a cron job every 10 min. It looks in the location that nzbget downloads to, and it automatically converts, fixes ID3s via the musicbrainz db, and moves the completed files to my music section. Anything that beets doesn't see as a 95% match, I then manually run the script and choose the correct musicbrainz ID for the band/album.
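A rough idea of what the cron-driven script could look like, as a sketch only and not my actual script. It assumes `beet` is on the PATH, uses the `-q` (quiet) flag of `beet import` so nothing prompts when run unattended, and the download path is a placeholder. How strict the auto-match is and what happens to the rest are controlled in the beets config (see `match.strong_rec_thresh` and `import.quiet_fallback` in the beets docs).

```python
import subprocess

# Placeholder path: wherever your downloader drops completed music.
DOWNLOAD_DIR = "/path/to/nzbget/completed/music"


def build_import_command(download_dir, beet_bin="beet"):
    """Build a non-interactive beets import command for one directory."""
    # -q = quiet mode: never prompt; uncertain matches follow the config fallback.
    return [beet_bin, "import", "-q", download_dir]


def run_import(download_dir):
    """Run the import and return (exit_code, combined output) for logging."""
    result = subprocess.run(
        build_import_command(download_dir),
        capture_output=True,
        text=True,
    )
    return result.returncode, result.stdout + result.stderr
```

Cron then just calls the script every 10 minutes, and whatever gets skipped can be handled later with a normal interactive `beet import` on the same folder.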

Is google that stupid that this will not bring on an onslaught of abuse to circumvent their false god status of the internet? I have already been “part 3’d” and completely bypassed it.

They get jackshit from me now at all. At least before they may have made some revenue from me for viewing the videos, now I am a ghost. No money for anyone. (And in case you are gonna say it: I don't care that content makers are losing, they are all dumbarses, and if they are supporting yt still and not posting elsewhere, then they are just stupid cunts.) The only reason google is doing this is to make more money off of the people who make content, but not upset their already unstable revenue income. If the content providers get antsy and leave, YT loses.

Sonarr and Radarr are essentially tailored webpages. You will probably not get both running nicely on one Pi Zero W, due to RAM constraints (a Pi Zero 2 W would be okay). However, one of those apps on a Pi Zero W would be fine, and given enough swap it's possible that both would run (albeit slowish, but who cares? It's automated so you don’t have to! Doesn’t matter if the “grab” takes a couple more seconds than normal).

There would be a couple of things to note. Firstly, start with a slim distro and remove unnecessary stuff. Secondly, you would go the nginx route rather than Apache2, since it is far more lightweight and has less RAM overhead. Finally, make sure you have a swap area on your storage (preferably not on SD, but you have little choice). The OS (assuming Debian or whatever) will be able to swap out other processes when memory gets low for the active process.
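If you want to see whether swap is actually set up and being used, Linux exposes it in `/proc/meminfo`. A small sketch that parses it (Linux only; the helper names are made up, and the values in that file are in kB):

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a dict of {field: value_in_kB}."""
    values = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        parts = rest.split()
        if parts and parts[0].isdigit():
            values[key.strip()] = int(parts[0])
    return values


def swap_report(text):
    """Summarise swap and available RAM in MB from meminfo text."""
    info = parse_meminfo(text)
    total = info.get("SwapTotal", 0)
    free = info.get("SwapFree", 0)
    return {
        "swap_total_mb": total // 1024,
        "swap_used_mb": (total - free) // 1024,
        "mem_available_mb": info.get("MemAvailable", 0) // 1024,
    }


def read_swap_report(path="/proc/meminfo"):
    """Read the live values on a Linux box."""
    with open(path) as fh:
        return swap_report(fh.read())
```

If `swap_total_mb` comes back as 0, there is no swap configured and the kernel has nowhere to push idle processes.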

Finally, transfer would be fine using wifi, alongside the browsing data but this is likely to stutter on occasions when you are browsing whilst it is transferring an NZB etc to nzbget or whatever your dldr is. (NZB’s these days can be quite large, especially for a 60GB BR rip type grab.) For torrents you’d probably not notice.

The Pi Zero 2 W is essentially an RPi 3B with less RAM but with multiple cores, so it would be more competent (due to being able to switch out to swap faster/more efficiently). But there is no reason why a Pi Zero W could not run one of those apps (maybe both, depending on the local db sizes). After all, they are only single threaded anyway, it's just how the OS works with them.

(Most of the posts in this thread obviously have little to no knowledge of how Linux works, or the capabilities of the hardware.)