English

English- •

- 2d

- •

- mFat English

- •

- 12h

- •

- @Maroon@lemmy.world English

- •

- 1d

- •

English

English- •

- 2d

- •

- @hempster@lemm.ee English

- •

- 1d

- •

- @chagall@lemmy.world English

- •

- github.com

- •

- 1d

- •

- @CTDummy@lemm.ee English

- •

- 2d

- •

English

English- •

- 3d

- •

- @Tinnitus@lemmy.world English

- •

- 2d

- •

- @GravitySpoiled@lemmy.ml English

- •

- 3d

- •

English

English- •

- 3d

- •

English

English- •

- www.theguardian.com

- •

- 4d

- •

- @dont@lemmy.world English

- •

- 3d

- •

- @Dust0741@lemmy.world English

- •

- 2d

- •

English

English- •

- ericthomas.ca

- •

- 4d

- •

English

English- •

- 3d

- •

- @LunchMoneyThief@links.hackliberty.org English

- •

- 3d

- •

English

English- •

- github.com

- •

- 10d

- •

English

English- •

- 5d

- •

- @yeldarb12@r.nf English

- •

- 10d

- •

English

English- •

- github.com

- •

- 6d

- •

- @Moonrise2473@feddit.it English

- •

- 11d

- •

- @someoneFromInternet@lemmy.ml English

- •

- 5d

- •

- @Im_old@lemmy.world English

- •

- 7d

- •

English

English- •

- 6d

- •

English

English- •

- 12d

- •

- @Railison@aussie.zone English

- •

- 11d

- •

English

English- •

- blog.krafting.net

- •

- 10d

- •

- @vgnmnky@lemmy.world English

- •

- 6d

- •

- @sillyhatsonly@lemmy.blahaj.zone English

- •

- 5d

- •

English

English- •

- 12d

- •

- @bandwidthcrisis@lemmy.world English

- •

- 13d

- •

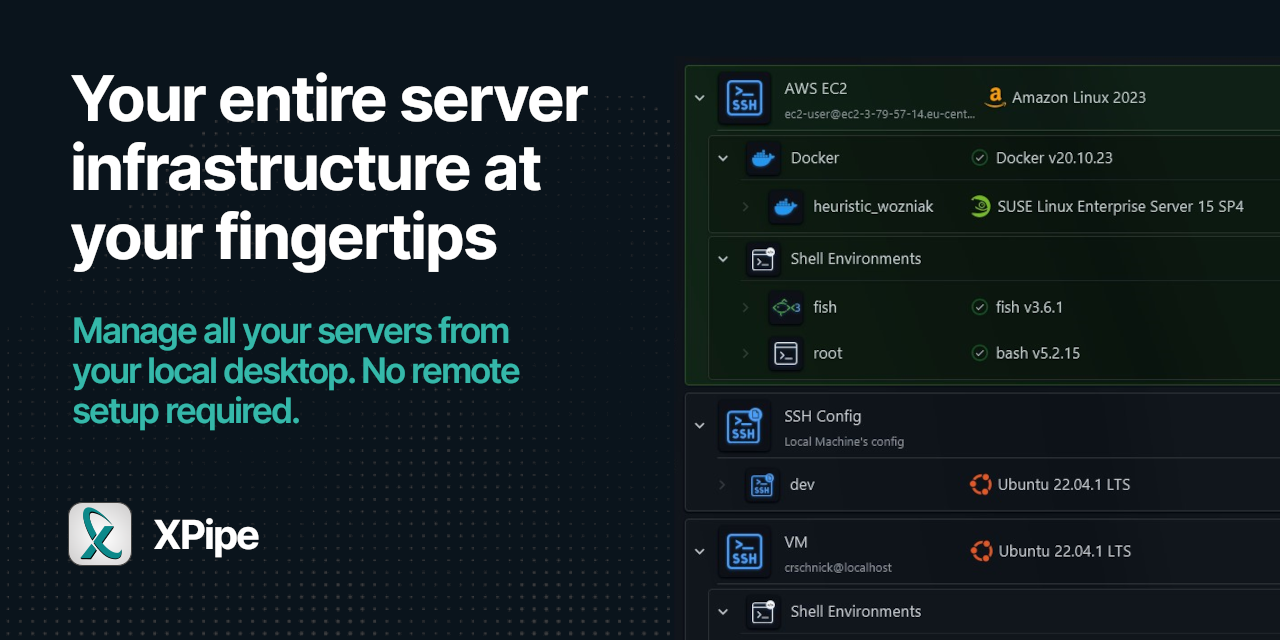

- @crschnick@sh.itjust.works English

- •

- github.com

- •

- 15d

- •

English

English- •

- 13d

- •

- @HamalaKarris@lemmy.world English

- •

- 12d

- •

Selfhosted

!selfhosted@lemmy.world

A place to share alternatives to popular online services that can be self-hosted without giving up privacy or locking you into a service you don’t control.

Rules:

- Be civil: we’re here to support and learn from one another. Insults won’t be tolerated. Flame wars are frowned upon.

- No spam posting.

- Posts have to be centered around self-hosting. There are other communities for discussing hardware or home computing. If it’s not obvious how your post topic relates to self-hosting, please include details to make it clear.

- Don’t duplicate the full text of your blog or GitHub here. Just post the link for folks to click.

- Submission headline should match the article title (don’t cherry-pick information from the title to fit your agenda).

- No trolling.

Resources:

- awesome-selfhosted software

- awesome-sysadmin resources

- Self-Hosted Podcast from Jupiter Broadcasting

Any issues in the community? Report them using the report flag.

Questions? DM the mods!

- 0 users online

- 184 users / day

- 401 users / week

- 1.08K users / month

- 3.97K users / 6 months

- 1 subscriber

- 3.56K Posts

- 71.5K Comments


Lemmy.World

A generic Lemmy server for everyone to use.

The World’s Internet Frontpage

Lemmy.World is a general-purpose Lemmy instance covering various topics, for the entire world to use.

Be polite and follow the rules ⚖ https://legal.lemmy.world/tos

Get started

See the Getting Started Guide

Donations 💗

If you would like to make a donation to support the cost of running this platform, please do so at the following donation URLs.

If you can, please use (or switch to) Ko-Fi; it has the lowest fees for us.

Join the team 😎

Check out our team page to join.

Questions / Issues

- Reporting is to be done via the report button under a post or comment.

- Please note: you will NOT be able to comment or post while on a VPN or Tor connection.

More Lemmy.World

Follow us for server news 🐘

Chat 🗨

Alternative UIs

- https://a.lemmy.world - Alexandrite UI

- https://photon.lemmy.world - Photon UI

- https://m.lemmy.world - Voyager mobile UI

- https://old.lemmy.world - A familiar UI

Monitoring / Stats 🌐

Service Status 🔥

https://status.lemmy.world

Lemmy.World is part of the FediHosting Foundation.