
Maybe this will help you: https://linuxcontainers.org/incus/docs/main/backup/

How are snapshots with ZFS on Incus?

What do you mean? They work, described here, the WebUI can also make snapshots for you.

You should consider replacing Proxmox with LXD/Incus because, depending on your needs, you might be able to replace your Proxmox instances with Incus and avoid a few headaches in the future.

While being free and open-source software, Proxmox requires a paid license for the stable version and updates. Furthermore, the Proxmox guys have been found to withhold important security updates from non-stable (not paying) users for weeks.

Incus / LXD is an alternative that offers most of the Proxmox’s functionality while being fully open-source – 100% free and it can be installed on most Linux systems. You can create clusters, download, manage and create OS images, run backups and restores, bootstrap things with cloud-init, move containers and VMs between servers (even live sometimes).

Incus also provides a unified experience to deal with both LXC containers and VMs, no need to learn two different tools / APIs as the same commands and options will be used to manage both. Even profiles defining storage, network resources and other policies can be shared and applied across both containers and VMs. The same thing can’t be said about Proxmox, while it tries to make things smoother there are a few inconsistencies and incompatibilities there.
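To make the "same commands for both" point concrete, here is a rough sketch that builds the `incus launch` command line for a container and for a VM. The only difference is the `--vm` flag. The instance names and the `lan-bridge` profile are made up for illustration, and nothing is executed, the commands are only printed.

```python
from typing import List, Optional

IMAGE = "images:debian/12"  # image alias on the public image server


def incus_launch(name: str, vm: bool = False, profile: Optional[str] = None) -> List[str]:
    """Build the argv for `incus launch`; a VM only differs by the --vm flag."""
    cmd = ["incus", "launch", IMAGE, name]
    if vm:
        cmd.append("--vm")
    if profile:
        # Hypothetical profile name; the same profile can be applied
        # to containers and VMs alike.
        cmd += ["--profile", profile]
    return cmd


# Print both commands instead of running them.
for argv in (
    incus_launch("web-ct", profile="lan-bridge"),
    incus_launch("web-vm", vm=True, profile="lan-bridge"),
):
    print(" ".join(argv))
```

You could pass the resulting list to `subprocess.run` on a host that has Incus installed.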

Incus is free and can be installed on any clean Debian system with little to no overhead, and with the release of Debian 13 it will be included in the repositories.

Another interesting advantage of Incus is that you can move containers and VMs between hosts with different base kernels and Linux distros. If you’ve bought into the immutable distro movement you can also have your hosts run an immutable distro with Incus on top.

Incus Under Debian 12

If you’re on stable Debian 12 then you’ve a couple of options:

- Run the LXD version provided on their repositories: this will give you LXD 5.0.2 LTS that is guaranteed to be compatible with Debian 13’s Incus. Note that this was added before Canonical decided to move LXD in-house;

- Use the backported version as described here: https://linuxcontainers.org/incus/docs/main/installing/;

- Get the latest Incus pre-compiled from https://github.com/zabbly/incus and install as described above.

With the first option you’ll get a Debian 12 stable system with a stable LXD 5.0.2 LTS. It works really well, however it doesn’t provide a WebUI. The second and third options will give you the latest Incus but they might not be as stable. Personally I was running LXD from Snap since Debian 10, and moved to the LXD 5.0.2 LTS from the repository under Debian 12 because I don’t care about the WebUI. I can see how some people, particularly those coming from Proxmox, would like the WebUI, so getting the latest Incus might be a good option.

I believe most people running Proxmox today will, eventually, move to Incus and never look back, I just hope they do before Proxmox GmbH changes their licensing schemes or something fails. If you don’t require all features of Proxmox then Incus works way better with less overhead, is true open-source, requires no subscriptions, and doesn’t delay important security updates.

Note that modern versions of Proxmox already use LXC containers so why not move to Incus that is made by the same people? Why keep dragging all of the Proxmox overhead and potential issues?

Too many pieces that can potentially break. I’ve been looking at http://nginx.org/en/docs/http/ngx_http_auth_request_module.html and there’s this https://github.com/kendokan/phpAuthRequest that is way more self-contained and simple to maintain long term. The only issue I’m facing with that solution is that I’m not yet capable of passing a token / username in a header to the final application.
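For what it’s worth, here is a minimal sketch of how the header part could work with `auth_request`: the auth endpoint answers 200 or 401 and, on success, returns the username in a response header that nginx can copy to the upstream request. This is not how phpAuthRequest does it. The session store, the cookie name `session`, the header name `X-Auth-User` and port 9000 are all assumptions made up for the example.

```python
from http.cookies import SimpleCookie
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Dict, Optional, Tuple

# Hypothetical session store: session token -> username.
SESSIONS: Dict[str, str] = {"demo-token": "alice"}


def authenticate(cookie_header: Optional[str]) -> Tuple[int, Dict[str, str]]:
    """Return (status, extra response headers) for an auth subrequest."""
    cookie = SimpleCookie(cookie_header or "")
    morsel = cookie.get("session")
    user = SESSIONS.get(morsel.value) if morsel else None
    if user is None:
        return 401, {}  # nginx denies the original request
    return 200, {"X-Auth-User": user}  # nginx lets the request through


class AuthHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        status, headers = authenticate(self.headers.get("Cookie"))
        self.send_response(status)
        for name, value in headers.items():
            self.send_header(name, value)
        self.end_headers()


def serve(port: int = 9000) -> None:
    """Run the auth endpoint on localhost only (call this to start it)."""
    HTTPServer(("127.0.0.1", port), AuthHandler).serve_forever()
```

On the nginx side this would pair with `auth_request_set $auth_user $upstream_http_x_auth_user;` followed by `proxy_set_header X-Auth-User $auth_user;` in the protected location, which also overwrites any `X-Auth-User` header a client tries to send on its own.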

- TP-Link Tapo line - I own those; they require internet / cloud access for setup, then can be viewed by any ONVIF capable software, VLC etc. You can cut their internet access and they mostly work, however timestamps and some features may break randomly;

- Reolink / Amcrest - no internet required, can be set up offline AND have a WebUI that allows full control over all functionality. Check the details of specific models, they may vary a bit.

Amcrest is most likely the most offline-friendly brand. Here’s a testimonial from another user:

I’ve been using Amcrest and Foscam IP cameras at my home for the past several years. I have them connected to a no-internet VLAN with an NVR. The models I’ve been using have an ethernet port and wifi. Setup was connecting to the ethernet port and then accessing the web UI in a browser to configure settings (most importantly turning on RTSP or ONVIF feeds)

I’ve been looking into some kind of simple SSO to handle this. I’m tired of entering passwords (even if it’s all done by the password manager); a single authentication point with a single user would be great.

Keycloak and friends are way too complex. Ideally I would like to have something in my nginx reverse proxies that would handle authentication at that level and tell the final app what user is logged on in some safe way.

it’s possible to have an email client download all the messages from Gmail and remove them from the server. I would like to set up a service on my servers to do that and then act as mail server for my clients. Gmail would still be the outgoing relay and the always-on remote mailbox, but emails would eventually be stored locally where I have plenty of space.

Do you really need this extra server? Why not just configure the account on Thunderbird and move the older / archival mail to a local folder? Or even drag and drop it out of Thunderbird to a folder and store the resulting files somewhere?

I’m just asking this because most people won’t need regular access to very old email and just storing the files on a NAS or something makes it easier.

Their devices usually require a ui.com account and linking the device. As some people already said, you’ll still require a cloud connection to set up the device, even if standalone, by using their mobile or desktop app. Doesn’t seem like a good choice for someone who’s into privacy and self-hosting.

It is somewhat sensitive, at least wireless device names, network/switch setup, MAC addresses and LED/ GPIO settings are going to be different - almost always (and this list is far from complete).

Usually what I do is I take the config and merge it manually (Beyond Compare), to the default config of a new unit, that way I can adjust the interfaces and other details.

To be fair I only do this because I tend to deploy OpenWrt on customers quite a lot and sometimes I don’t have a config for some specific hardware already done. A router is basically a fridge, it should last a long time and even if you have to manually configure everything it won’t be much of an issue 5 or 10 years later.
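If you don’t have Beyond Compare at hand, the same kind of comparison can be roughly approximated with Python’s `difflib`. This is only a sketch: the two config snippets are made-up examples in UCI style, not taken from real units, and you still have to decide by hand which side to keep for each difference.

```python
import difflib
from typing import List

# Made-up excerpt from the old unit's /etc/config/network.
OLD_UNIT = """config interface 'lan'
    option device 'br-lan'
    option ipaddr '192.168.10.1'
config device
    option name 'br-lan'
    list ports 'eth0'
"""

# Made-up default config of the new unit (different port naming).
NEW_UNIT_DEFAULT = """config interface 'lan'
    option device 'br-lan'
    option ipaddr '192.168.1.1'
config device
    option name 'br-lan'
    list ports 'lan1'
"""


def config_diff(old: str, new: str) -> List[str]:
    """Return a unified diff between the old config and the new default."""
    return list(
        difflib.unified_diff(
            old.splitlines(),
            new.splitlines(),
            fromfile="old-unit/network",
            tofile="new-unit-default/network",
            lineterm="",
        )
    )


for line in config_diff(OLD_UNIT, NEW_UNIT_DEFAULT):
    print(line)
```

Here the IP address is something you would carry over from the old unit, while the port name is hardware-specific and should stay as the new default has it.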

I’ve done my fair share of long runs of Cat6e 23 AWG with PoE and they all work fine at gigabit on distances of 100 meters or close to it. Sometimes even slightly above that.

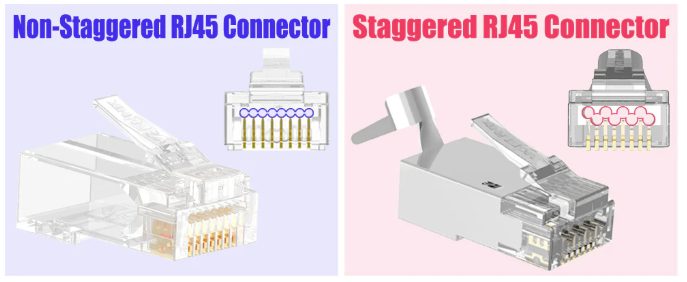

Staggered will reduce the failure rate by a lot, especially if you’re into gigabit speeds or anything above it. Although I know from experience that you can get gigabit on non-staggered connectors, it won’t always happen on the first try. On long distances the noise caused by having the wires side by side may also cause problems.

Btw, if you’ve small patch cables don’t use solid core for those, those should be stranded cables and they’ll be more flexible, less likely to break when bent and less prone to bad contacts.

CCA probably wasn’t your issue there, CCA is actually becoming the standard everywhere because copper is way too expensive and to be fair not needed with modern hardware.

Your most likely issue with that CCA is the AWG size you picked, cheap cable is usually 24, 26 or even 28 AWG and those will be bad.

If you want PoE or anything gigabit or above you need to pick 23 AWG. This is considerably cheaper than full copper and it will work fine for the max. rated 100m. Either way, cheap 26 AWG should be able to deliver gigabit and PoE at short distances like 20 meters or so.
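To put rough numbers on why the gauge matters, here is a small sketch that computes conductor diameter from the standard AWG formula and the DC resistance of a single conductor. It uses the resistivity of pure copper as an assumption. CCA has a higher resistivity, and I don’t have a reliable figure for it, so it is left as a parameter you can override.

```python
import math

COPPER_RESISTIVITY = 1.68e-8  # ohm*m at about 20 C (pure copper, assumption)


def awg_diameter_mm(awg: int) -> float:
    """Conductor diameter in mm from the standard AWG definition."""
    return 0.127 * 92 ** ((36 - awg) / 39)


def conductor_resistance_ohm(
    awg: int, length_m: float, resistivity: float = COPPER_RESISTIVITY
) -> float:
    """DC resistance of one solid conductor of the given gauge and length."""
    area_m2 = math.pi * (awg_diameter_mm(awg) / 1000) ** 2 / 4
    return resistivity * length_m / area_m2


for gauge in (23, 24, 26, 28):
    print(
        f"{gauge} AWG: {awg_diameter_mm(gauge):.3f} mm, "
        f"{conductor_resistance_ohm(gauge, 100):.1f} ohm per 100 m"
    )
```

Each step up in gauge number means a thinner conductor and more resistance, so more of the PoE power is lost as heat in the cable on long runs.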

Another important thing is to make sure your terminations are properly done and the plugs are good. Meaning, no Cat5e connectors should be used, always use staggered ones:

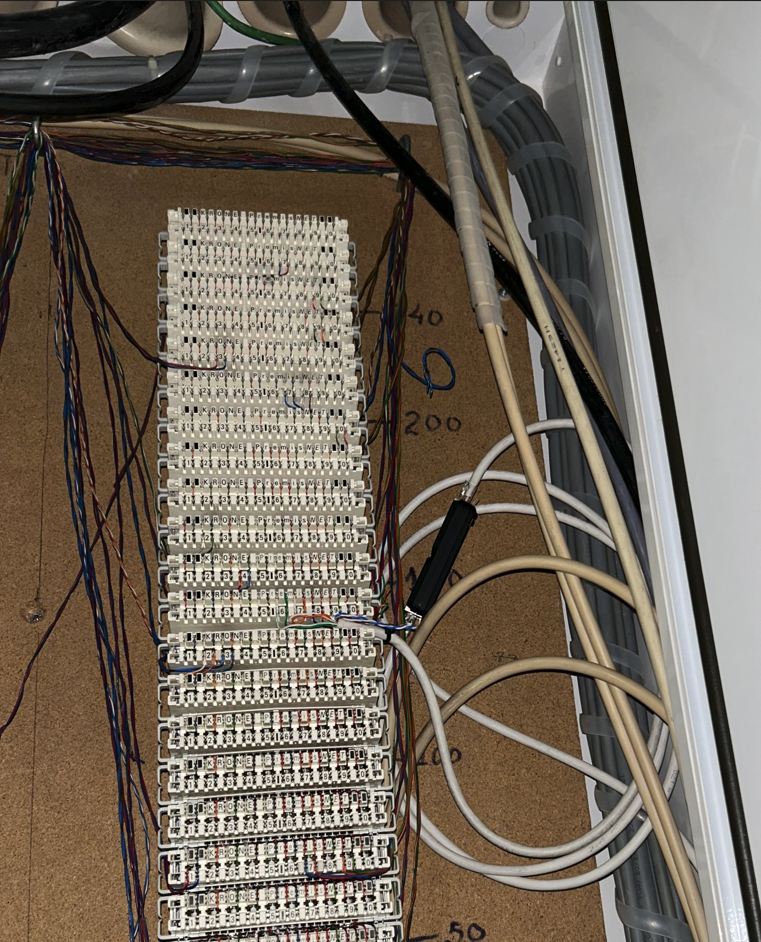

I’ve a run of around 60 meters of old telephone cables (made out of copper, 4 wires) and I can get 100Mbps on those reliably. I used the old telephone infrastructure on the building to pass network from an apartment to the basement that way.

Not to spec for ethernet in any way, not even twisted pairs, but they do work. Unfortunately I can’t replace the run with a proper Cat6 cable because there’s a section that I can’t find where it goes to, it just disappears on a floor and appears 2 floors below it inside the main telephone distribution box.

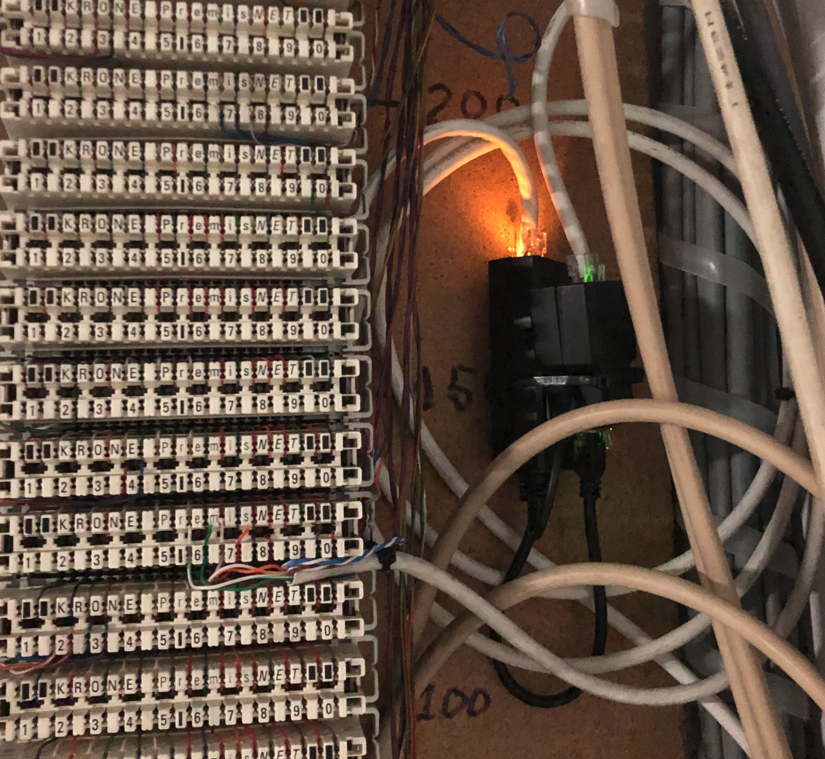

On the distribution box (that is already on the basement of the building) I’m plugging into the LSA connector that goes to the apartment:

The black box you see there is a MikroTik GPeR that is essentially a PoE switch with only 2 ethernet ports so I can get over the 100m limitation of ethernet. I’m running a Cat6 cable from there on metal cable trays for about another 90 meters until it reaches a storage unit 2 floors below ground.

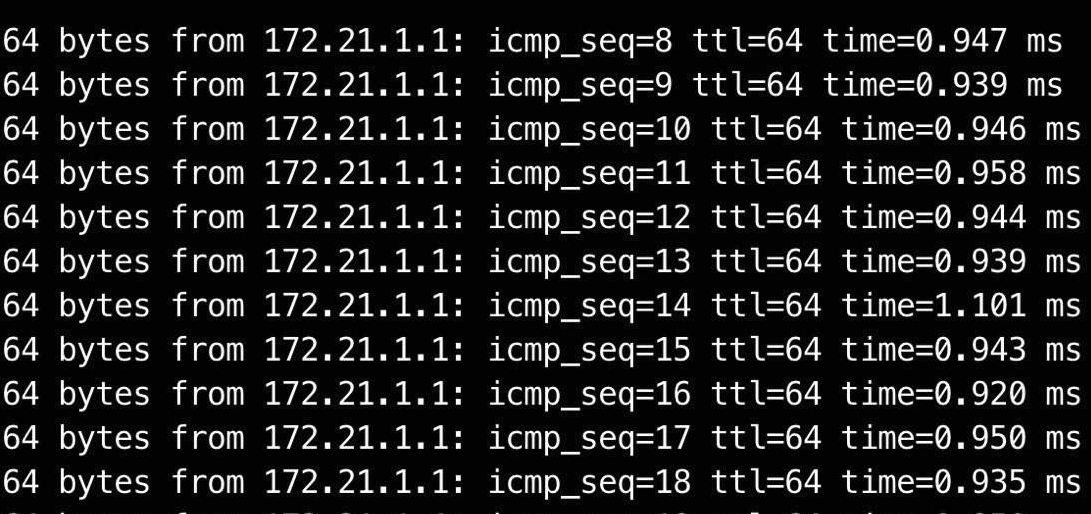

Here’s a ping test from a machine sitting on the storage box to the router on the apartment:

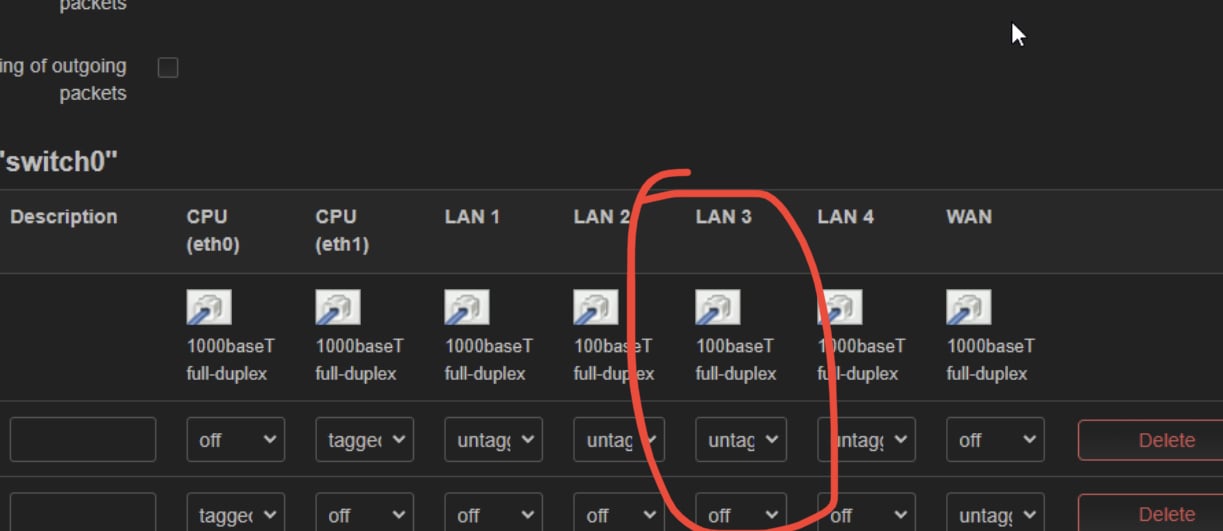

The router reports this as a 100M full-duplex connection:

If anyone wants to try a setup like this, or just extend ethernet > 100m, it also worked fine with a cheap 5$ 100M switch from Aliexpress and a PoE injector + splitter.

However I eventually got the mikrotik gper for free so I decided to replace it because it should be more reliable.

Yes but… tests are done in controlled environments and ideal conditions, there are big real-world differences with CCA vs full copper or those solid core options vs stranded ones. They’ll all perform differently depending on distance, their noise immunity will vary and they’ll break in different ways when tension is applied. You can also get Cat5e in different AWG sizes, all spec compliant but all very different from each other.

The bottom line is: it all comes down to how much you’re willing to spend.

Here’s the thing, you now know you’ve to replace the battery every 3 years or so, but I’ve SONY batteries running for 5 years and they’re fine. Now, your motherboard’s manufacturer is a piece of shit, the board should’ve just ignored the issue and proceeded to the OS. All modern operating systems sync the UEFI time whenever they get time from NTP so no need to halt the boot process.

I would go with Gitea or Forgejo (not sure how this is going to last) if you need a complete experience like a WebUI, issues, PR, roles and whatnot.

If you’re looking for just a git server then gitolite is a very good and solid option. The cool thing about this one is that you create your repositories and add users using a repository inside the thing itself :).

Then you can use any Windows GUI you like, such as Fork, SourceTree, your IDE etc…

That explanation is misleading because:

- OpenWrt does firewalling and routing very well;

- If you’ve a small / normal network, OpenWrt will provide you with a much cleaner open-source experience and also allow for all the customization you would like;

- There are routers specifically made to run OpenWrt, so it isn’t only a replacement firmware.

Free Dyndns services seem to be a bit crap

Why do you say that? https://freedns.afraid.org/ and https://www.duckdns.org are very solid and if you’re looking for something more corporate even Cloudflare offers that service for free.

No-IP

Don’t recommend that. There are plenty of better alternatives such as https://freedns.afraid.org/ and https://www.duckdns.org/ that aren’t run by predatory companies that may pull the plug like DynDNS did.

That looks like a DDoS, for instance that doesn’t ever happen on my ISP as they have some kind of DDoS protection running akin to what we would see on a decent cloud provider. Not sure of what tech they’re using, but there’s certainly some kind of rate limiting there.

- Isolate the server from your main network as much as possible. If possible have them on a different public IP either using a VLAN or better yet with an entire physical network just for that - avoids VLAN hopping attacks and DDoS attacks to the server that will also take your internet down;

In my case I can simply have a bridged setup where my Internet router gets one public IP and the exposed services get another / different public IP. If there’s ever a DDoS, the server might be hammered with requests and go down but unless they exhaust my full bandwidth my home network won’t be affected.

Another advantage of having a bridged setup with multiple IPs is that when there’s a DDoS/bruteforce then your router won’t have to process all the requests coming in, they’ll get dispatched directly to your server without wasting your router’s CPU.

As we can see this thing about exposing IPs depends on very specific implementation details of your ISP or your setup so… it may or may not be dangerous.

Oh well, If you think you’re good with Docker go ahead use it, it does work but has its own dark side…

cause its like a micro Linux you can reliably bring up and take down on demand

If that’s what you’re looking for, maybe a look at Incus/LXD/LXC or systemd-nspawn will be interesting for you.

I hope the rest can help you have a more secure setup. :)

Another thing that you can consider is: instead of exposing your services directly to the internet, use a VPS as a tunnel / reverse proxy for your local services. This way only the VPS IP will be exposed to the public (and will be a static and stable IP) and nobody can access the services directly.

client —> VPS —> local server

The TL;DR is installing a Wireguard “server” on the VPS and then have your local server connect to it. Then set something like nginx on the VPS to accept traffic on port 80/443 and forward to whatever you’ve running on the home server through the tunnel.
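As a rough illustration of that tunnel, the sketch below renders the two WireGuard config files, one for the VPS and one for the home server. Everything specific in it is an assumption: the `10.8.0.x` tunnel addresses, port 51820, the 25 second keepalive, the hostname `vps.example.org` and the placeholder keys, which you would replace with real ones generated by `wg genkey` / `wg pubkey`.

```python
TUNNEL_VPS_IP = "10.8.0.1"   # assumed tunnel address of the VPS
TUNNEL_HOME_IP = "10.8.0.2"  # assumed tunnel address of the home server


def vps_config(vps_private_key: str, home_public_key: str, port: int = 51820) -> str:
    """wg0.conf for the VPS: listens on a public UDP port."""
    return (
        "[Interface]\n"
        f"PrivateKey = {vps_private_key}\n"
        f"Address = {TUNNEL_VPS_IP}/24\n"
        f"ListenPort = {port}\n"
        "\n"
        "[Peer]\n"
        f"PublicKey = {home_public_key}\n"
        f"AllowedIPs = {TUNNEL_HOME_IP}/32\n"
    )


def home_config(home_private_key: str, vps_public_key: str, endpoint: str) -> str:
    """wg0.conf for the home server: dials out, so no port forward is needed."""
    return (
        "[Interface]\n"
        f"PrivateKey = {home_private_key}\n"
        f"Address = {TUNNEL_HOME_IP}/24\n"
        "\n"
        "[Peer]\n"
        f"PublicKey = {vps_public_key}\n"
        f"Endpoint = {endpoint}\n"
        f"AllowedIPs = {TUNNEL_VPS_IP}/32\n"
        # Keeps the NAT mapping open so the VPS can always reach home.
        "PersistentKeepalive = 25\n"
    )


print(vps_config("<vps-private-key>", "<home-public-key>"))
print(home_config("<home-private-key>", "<vps-public-key>", "vps.example.org:51820"))
```

With the tunnel up, nginx on the VPS would `proxy_pass` to the home server’s tunnel address (here `10.8.0.2`) instead of a public IP.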

I personally don’t think there’s much risk with exposing your home IP as part of your self hosting but some people do. It also depends on what protection your ISP may offer and how likely you think a DDoS attack is. If your ISP provides you with a dynamic IP it may not even matter as a simple router reboot should give you a new IP.

It depends on what you’re self-hosting and if you want / need it exposed to the Internet or not. When it comes to software the hype is currently to set up a minimal Linux box (old computer, NAS, Raspberry Pi) and then install everything using Docker containers. I don’t like this Docker trend because it 1) leads you towards a dependence on proprietary repositories and 2) robs you of the experience of learning Linux (more here) but it does lower the bar for newcomers and lets you set up something really fast. In my opinion you should be very skeptical about everything that is “sold to the masses”, just go with a simple Debian system (command line only), SSH into it and install what you really need, take your time to learn Linux and whatnot.

Strictly speaking about security: if we’re talking about LAN only things are easy and you don’t have much to worry about as everything will be inside your network thus protected by your router’s NAT/Firewall.

For internet facing services your basic requirements are:

- Some kind of domain / subdomain, paid or free;

- Preferably a home ISP that provides public IP addresses - no CGNAT BS;

- Ideally a static IP at home, but you can do just fine with a dynamic DNS service such as https://freedns.afraid.org/.

Quick setup guide and checklist:

- Create your subdomain for the dynamic DNS service https://freedns.afraid.org/ and install the daemon on the server - will update your domain with your dynamic IP when it changes;

- List what ports you need remote access to;

- Isolate the server from your main network as much as possible. If possible have them on a different public IP either using a VLAN or better yet with an entire physical network just for that - avoids VLAN hopping attacks and DDoS attacks to the server that will also take your internet down;

- If you’re using VLANs then configure your switch properly. Decent switches allow you to restrict the WebUI to a certain VLAN / physical port - this will make sure that if your server is hacked they won’t be able to access the switch’s UI and reconfigure their own port to access the entire network. Note that cheap TP-Link switches usually don’t have a way to specify this;

- Configure your ISP router to assign a static local IP to the server and port forward what’s supposed to be exposed to the internet to the server;

- Only expose required services (nginx, game server, program x) to the Internet. Everything else such as SSH, configuration interfaces and whatnot can be moved to another private network and/or a WireGuard VPN you can connect to when you want to manage the server;

- Use custom ports with 5 digits for everything - something like 23901 (up to 65535) to make your service(s) harder to find;

- Disable IPv6? Might be easier than dealing with a dual stack firewall and/or other complexities;

- Use nftables / iptables / another firewall and set it to drop everything but those ports you need for services and management VPN access to work - 10 minute guide;

- Configure nftables to only allow traffic coming from public IP addresses (IPs outside your home network IP / VPN range) to the Wireguard or required services port - this will protect your server if by some mistake the router starts forwarding more traffic from the internet to the server than it should;

- Configure nftables to restrict what countries are allowed to access your server. Most likely you only need to allow incoming connections from your country and more details here.
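The nftables items in the checklist can be sketched as a small generator that prints a ruleset you could review and load with `nft -f`. Treat it as a starting point under assumptions, not a finished firewall: the port numbers, the `wg0` interface name and SSH on port 22 for management are made up, it drops all IPv6 and ICMP as a side effect of the default drop policy, and the country restriction is not covered.

```python
from typing import Iterable

# RFC 1918 ranges, used to express "only from public IPs".
PRIVATE_RANGES = ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")


def render_ruleset(wg_port: int, tcp_ports: Iterable[int], wg_iface: str = "wg0") -> str:
    """Render a minimal default-drop nftables input chain."""
    ports = ", ".join(str(p) for p in sorted(tcp_ports))
    private = ", ".join(PRIVATE_RANGES)
    return f"""table inet filter {{
    chain input {{
        type filter hook input priority 0; policy drop;
        iif "lo" accept
        ct state established,related accept
        # WireGuard and public services: only from non-private source IPs
        ip saddr != {{ {private} }} udp dport {wg_port} accept
        ip saddr != {{ {private} }} tcp dport {{ {ports} }} accept
        # Management (SSH) only through the VPN interface
        iifname "{wg_iface}" tcp dport 22 accept
    }}
}}
"""


# Hypothetical 5-digit ports, in the spirit of the checklist above.
print(render_ruleset(wg_port=51820, tcp_ports=[23901, 23902]))
```

Before loading anything like this on a remote box, make sure you have another way in, since a mistake in the input chain will lock you out.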

Realistically speaking, if you’re doing this just for a few friends why not require them to access the server through a WireGuard VPN? This will reduce the risk a LOT and probably won’t impact the performance. Here’s a decent setup guide and you might use this GUI to add/remove clients easily.

Don’t be afraid to expose the WireGuard port because if someone tries to connect and they don’t authenticate with the right key the server will silently drop the packets.

Now if your ISP doesn’t provide you with a public IP / port forwarding abilities you may want to read this in order to find out why you should avoid Cloudflare tunnels and how to set up an alternative / more private solution.
