The default tier of AWS glacier uses tape, which is why data retrieval takes a few hours from when you submit the request to when you can actually download the data, and costs a lot.

AFAIK Glacier is unlikely to be tape based. A bunch of offline drives is a more realistic scenario. But generally it’s not public knowledge, unless you found some trustworthy source for the tape theory?

Let me be more clear: devs are not required to release binaries at all. But they should, if they want their work to be widely used.

Yeah, but that’s not the reality of the situation. Docker images are what drives wide adoption. Docker is also a great development tool if one needs to test stuff quickly, so the Dockerfile is there from the very beginning and thus providing an image is almost free.

Binaries are more involved because suddenly you have multiple OSes, libc, musl,… It’s not always easy to build a statically linked binary (and it’s also often a bad idea), so it’s much less likely to happen. If you tried just running a statically linked binary on NixOS, you probably know it’s not as simple as chmod a+x.

I also fully agree with you that curl+pipe+bash random stuff should be banned as awful practice and that is much worse than containers in general. But posting instructions on forums and websites is not per se dangerous or a bad practice. Following them blindly is, but there are still people not wearing seatbelts in cars or helmets on bikes, so…

Exactly what I’m saying. People will do stupid stuff and containers have nothing to do with it.

Chmod 777 should be banned in any case, but that stems from container usage (due to wrongly built images) more than anything else, so I guess you are biting your own cookie here.

Most of the time it’s not necessary at all. People just go with “allow everything, because I have no idea where the problem could be”. Containers frequently run as root, so I’d say the chmod is not necessary.

In a world where containers are the only proposed solution, I believe something will be taken from us all.

I think you mean images, not containers? I don’t think anything will be taken. An image is just easy to provide; if there is no binary provided, there would likely be no binary even without Docker.

In fact IIRC this practice of providing binaries is a relatively new trend (popularized by Go, I think). Back in the day you got source code and perhaps a Makefile. If you were lucky, a debian/src directory with code to build your package. And there was no lack of freedom.

On one hand you complain about Docker images making people dumb, on the other you complain about the absence of a pre-compiled binary instead of learning how to build the stuff you run. A bit of a double standard.

I don’t agree with the premise of your comment about containers. I think most of the downsides you listed are misplaced.

First of all they make the user dumber. Instead of learning something new, you blindly “compose pull & up” your way. Easy, but it’s dumbifier and that’s not a good thing.

I’d argue, that actually using containers properly requires very solid Linux skills. If someone indeed blindly “compose pull & up” their stuff, this is no different than blind curl | sudo bash which is still very common. People are going to muddle through the installation copy pasting stuff no matter what. I don’t see why containers and compose files would be any different than pipe to bash or random reddit comment with “step by step instructions”. Look at any forum where end users aren’t technically strong and you’ll see the same (emulation forums, raspberry pi based stuff, home automation,…) - random shell scripts, rm -rf this ; chmod 777 that

Containers are just another piece of software that someone can and will run blindly. But I don’t see why you’d single them out here.

Second, there is a dangerous trend where projects only release containers, and that’s bad for freedom of choice

As a developer I can’t agree here. The Docker images (not “containers” to be precise) are not there to replace deb packages. They are there because it’s easy to provide an image. It’s much harder to release a set of debs, rpms and whatnot for distributions the developer isn’t even using. The other options wouldn’t even be there in the first place, because there are only so many hours in a day and my open source work is not paying my bills most of the time. (Patches and continued maintenance are of course welcome.) So the alternative would be just the source code, which you still get. No one is limiting your options there. If anything, the Dockerfile at least shows exactly how you can build the software yourself even without using Docker. It’s just a bash script with extra isolation.

I am aware that you can download an image and extract the files inside, that’s more a hack than a solution.

Yeah please don’t do that. It’s probably not a good idea. Just build the binary or whatever you’re trying to use yourself. The binaries in image often depend on libraries inside said image which can be different from your system.

Third, with containers you are forced to use whatever deployment the devs have chosen for you. Maybe I don’t want 10 postgres instances one for each service, or maybe I already have my nginx reverse proxy or so.

It might be easier (effort-wise) but you’re certainly not forced. At the very least you can clone the repo and just edit the Dockerfile to your liking. With compose file it’s the same story, just edit the thing. Or don’t use it at all. I frequently use compose file just for reference/documentation and run software as a set of systemd units in Nix. You do you. You don’t have to follow a path that someone paved if you don’t like the destination. Remember that it’s often someone’s free time that paid for this path, they are not obliged to provide perfect solution for you. They are not taking anything away from you by providing solution that someone else can use.

I don’t have much experience with TS, but in other strongly typed language it goes even further than string vs number.

For example you can have two numbers Distance and TimeInSeconds and even though they are both numbers, the type system can make sure that you won’t do distance+time.

It can also let you do distance/time and return Speed type.

It will prevent many logical errors even though everything is technically a number.
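To make that concrete, here is a minimal sketch in Python. The class names `Distance`, `TimeInSeconds` and `Speed` follow the comment above; everything else (field names, units) is made up for illustration. Python only rejects the bad operation at runtime, although a static checker such as mypy should flag it earlier; in a statically typed language the same mistake would not even compile.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TimeInSeconds:
    value: float  # seconds


@dataclass(frozen=True)
class Speed:
    value: float  # metres per second (assumed unit for this sketch)


@dataclass(frozen=True)
class Distance:
    value: float  # metres (assumed unit for this sketch)

    def __add__(self, other: "Distance") -> "Distance":
        # Only Distance + Distance is meaningful; anything else is rejected.
        if not isinstance(other, Distance):
            return NotImplemented
        return Distance(self.value + other.value)

    def __truediv__(self, other: TimeInSeconds) -> Speed:
        # Distance / TimeInSeconds yields a Speed, not a bare number.
        if not isinstance(other, TimeInSeconds):
            return NotImplemented
        return Speed(self.value / other.value)


distance = Distance(100.0)
elapsed = TimeInSeconds(20.0)

speed = distance / elapsed          # a Speed
total = distance + Distance(50.0)   # still a Distance

try:
    distance + elapsed              # mixing units is a logic error
    mixing_allowed = True
except TypeError:
    mixing_allowed = False
```

Everything here is "technically a number", but the wrapper types carry the meaning, so the nonsensical addition fails while the division produces a value of the right kind.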

I’m not sure where this idea of high profile target comes from. The sim swap attack is pretty common. People just need to be in some credentials leak DB with some hint of crypto trading or having some somewhat interesting social media account. (either interesting handle or larger number of followers)

There are now organized groups that essentially provide sim swap as a service. Sometimes employees of the telco company are in on it. The barrier to entry is not that high, so the expected reward does not need to be that much higher.

In Tailscale you can set up an exit node which lets you access the entire internet via its internet connection.

You could set up an exit node that would let you access the internet via some (anonymizing) VPN providers like Mullvad or any other.

This sounds like Tailscale is simply setting up this exit node for Mullvad on their side and providing it as a service. So it’s not like using another VPN anonymizer is impossible, it’s just convenient to use Mullvad.

RAID is not backup. RAID is used for increased capacity, throughput or uptime. (Depending on configuration)

Multiple volumes would likely get corrupted just as much with faulty RAM as RAID would. Besides RAM there’s controller, CPU, power supply and possibly more single points of failure in that NAS, that would destroy both RAID and multiple volumes.

So assuming you have external backup, I’d go with RAID for better uptime as opposed to some custom multi volume pseudo-RAID for the same.

Yeah, it’s a pretty amazing system all things considered. It’s kind of as if 8-bit home computer systems continued to evolve, but kept the same principles of being really closely tied to the HW and with a very blurry line between kernel and user space. It radiates strong user ownership of the system. If you look at modern systems where you sometimes don’t even get superuser privileges (for better or worse), it’s quite a contrast.

Which is why it reminds me of Emacs so much. You can mess with most of the internals, there’s no major separation between “Emacs-space” and userspace. There are these jokes about Emacs being OS, but it really does remind me of those early days of home computing where you could tinker with low level stuff and there were no guardrails or locks stopping you.

I couldn’t help but think of Emacs when I was reading A Constructive Look At TempleOS. It’s like a TempleOS that is actually finished, it just lacks a kernel.

If it’s really early 2000s, you might want to put it on eBay. There are retro gamers out there that could use it as good Windows 9x era gaming PC. You could give that HW a new life in someone’s retro setup.

It’s great HW for occasional gaming, but it’s very inefficient for 24/7 operation. You want to be somewhere after 2015-ish for something that is supposed to run constantly.

Perhaps it’s kind of inevitable to have some bloat. For example apps these days handle most of the languages just fine including emoji, LTR/RTL and stuff. Some have pretty decent accessibility support. They can render pretty complicated interface at 8k screen reasonably fast. (often accelerated in some way) There is a ton of functionality baked in - your editor can render your html or markdown side by side with source code as you edit it. You have version control, terminal emulator, language servers, etc…

But then there’s Electron, which just takes engine capable of rendering anything and uses it to render UI, so as a result there’s not much optimization you can do. Button is actually a bunch of DOM elements wrapped in CSS… Etc… It’s just good enough for the “hardware is cheap” approach.

I think Emacs is a good example to look at. It has a ton of built-in functionality and with many plugins (either custom configuration or something like Doom Emacs) you can have a very capable editor, comparable to the likes of VS Code. Decades back Emacs had this reputation of being bloated, because it used megabytes of RAM. These days it’s even more “bloated” due to all the stuff that was added since. But in absolute numbers it does not need as many resources as its Electron-based peers. The difference can easily be an order of magnitude or more depending on configuration.

The author is upset that btrfs RAID arrays don’t function as he anticipated. However, btrfs isn’t ZFS or mdadm; it’s its own system and should be understood as such.

I’d say it’s quite a reasonable critique, because RAID1 is kind of an industry standard. I can’t think of any other RAID (HW or SW) that would do RAID1 in this way. If btrfs decided to call their implementation raid1 while it really isn’t raid1 in some major way, it was a very bad idea. I don’t agree it’s a documentation issue, it’s a really bad name choice. ZFS has raidz that does something similar to btrfs raid1 and the name does not lead to confusion. A RAID1 system should never lead to decreased reliability with an increasing number of drives.

The author points out that btrfs won’t auto-mount an array if a drive fails, while ZFS will. This is actually a protective measure. By not auto-mounting, it minimizes the risk of further drive failures, prioritizing data preservation.

RAID is an uptime-preserving mechanism. If anyone uses RAID for data preservation purposes, they are setting themselves up for a nasty surprise. A RAID system that does not mount in a reduced redundancy situation is a very bad design. It effectively sacrifices the usability of RAID to serve another purpose that a RAID system does not really need nor should be used for.

He attempts ZFS recovery methods on btrfs and is surprised when they don’t work.

I felt that way as well, but I think they raised one important point - there was no indication that the array was still in a reduced redundancy state after their “attempt at recovery”. ZFS is very clear about the state of the array at every step. Same for other RAID systems including some HW based ones. Every single one I’ve used was very clear about the fact that the array isn’t fully redundant.

In summary, the article’s author seems primarily upset that btrfs isn’t a ZFS clone.

FWIW I didn’t have that impression. I have experience with multiple RAID controllers and multiple SW RAID systems and his points would be valid with any of those.

Anyways thank you for your reply. It’s not the answer I was hoping for and I don’t agree with your views on some of these issues. But it gives me pretty good idea of the current state of the filesystem.

Out of curiosity in your experience, are issues mentioned in this article actually fixed now? They mention the write hole, so that was fixed. What about the rest?

I’d say it’s more about elasticity. Scaling is just very narrow aspect of elasticity.

To give you some specific example, there’s a company (that I won’t name) that by law has to have all data on premises. They have local cloud in their own datacentre. Part of that cloud is a set of powerful servers with ton of GPUs. Daytime they spin up VMs that employees can log into and have remote desktop for graphically intensive tasks.

Now you might be thinking “wait a second, they can’t easily add GPUs in the morning as employees log in, there is no scaling and thus no cloud!” And by that definition you’d be right. But what they do with their cloud is that as the demand for VDI drops in the evening, they will start allocating the GPU and CPU resources to completely different kind of VMs that do overnight data crunching. (think geospatial data) It’s completely different OS, the servers are in server subnet, not VDI network, etc… So they are using the elasticity, but it’s not just scaling.

Another counterexample is pretty frequent issue on AWS, where they momentarily run out of specific instance type in specific region. AWS support “will do their best” but you’re often looking at hours of wait time before you get your instance. Now depending where you live you could go buy a server and deploy it in your own DC faster than that. Has AWS stopped being cloud provider? No, you can use the elasticity and either spawn different instance type (if your workload allows that) or in different region/AZ. You might have been just trying to replace one instance with another, not even trying to scale up, it’s just the capacity for replacement wasn’t there.

For some definition of cloud. You also have on premises cloud. When Amazon runs their e-commerce site on AWS, are they running it on someone else’s computer or not in cloud? (putting aside some tax-wise separation of individual Amazon subsidiaries)

On the other hand there are still providers that will rent you a server in their DC, but you don’t get any API or anything else. At best they’ll plug in HDDs that you sent them. This server hosting existed before “cloud” was a thing and it continues to exist.

I’d say that more accurate definition of cloud would be “someone else’s computer with an API that customer can access”. And if I’m really strict about that definition I’d drop entire first part, because it’s the API that matters - computer might as well be yours.

Source: I’ve been on both sides of cloud from the very beginning.

You can self host. They even have official lemmy community at !joplinapp@sopuli.xyz

I really like their embrace of open source. Seeing their email app on f-droid first is quite refreshing. And when they started developing it, I just subscribed to github issues with features I considered crucial for me so that I’d get notification once they were implemented.

How often do you get at least changelog with closed source apps? I’d have to check every couple months whether they implemented features I need had this not been developed in the open.

In context of self hosting it’s probably worth pointing out, that SQLite specifically mentions NFS on their How To Corrupt An SQLite Database File page.
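If you want to check whether a database file ended up on a network mount, something along these lines could work. This is only a rough sketch: the function names, the list of filesystem types and the sample mount table are my own assumptions, it expects Linux-style `/proc/mounts` text, and it ignores escaped characters in mount points.

```python
import posixpath
from typing import Optional

# Filesystem types treated as "network" here; an assumed, non-exhaustive list.
NETWORK_FS_TYPES = {"nfs", "nfs4", "cifs", "smb3", "fuse.sshfs"}


def filesystem_type(path: str, mounts_text: str) -> Optional[str]:
    """Return the fs type of the longest mount point that contains path."""
    path = posixpath.normpath(path)
    best_mount, best_type = "", None
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue  # not a "device mountpoint fstype ..." line
        mount_point, fs_type = fields[1], fields[2]
        # Compare with trailing slashes so /srv/data does not match /srv/database.
        prefix = mount_point.rstrip("/") + "/"
        if (path + "/").startswith(prefix) and len(mount_point) > len(best_mount):
            best_mount, best_type = mount_point, fs_type
    return best_type


def is_on_network_fs(path: str, mounts_text: str) -> bool:
    return filesystem_type(path, mounts_text) in NETWORK_FS_TYPES


# Hypothetical mount table, in the same format as /proc/mounts.
SAMPLE_MOUNTS = """\
/dev/sda1 / ext4 rw,relatime 0 0
nas:/export/data /srv/data nfs4 rw,relatime 0 0
"""

db_on_nfs = is_on_network_fs("/srv/data/app/app.db", SAMPLE_MOUNTS)
db_on_local = is_on_network_fs("/var/lib/app/app.db", SAMPLE_MOUNTS)
```

On a real machine you would pass the contents of `/proc/mounts` instead of the sample text.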

SQLite is used in many popular services people run at home. Often as only or default option, because it does not require external service to work.

I haven’t seen anyone recommend Infomaniak Mail. I think it’s great option. It’s €1.50/month for 5 mailboxes with unlimited storage. You can add multiple domains and mailbox aliases for free. (no limit on either as far as I can tell) You get calendar and contacts as well. They also offer entire office suite, but that’s going to cost more.

They offer pretty good webmail interface, that’s not just Roundcube or other OSS webmail solutions. (which are okay, but usually limited by the fact that it’s IMAP on the backend) They offer apps for mobile calendar/contact sync and they also have (quite new, but already very good IMO) email app. These are all open source. You obviously have IMAP, CalDAV and such if you want to use your own client.

It’s not some one man show provider, they are a pretty big cloud provider in Switzerland. So you also get customer support that from my experience is pretty fast to respond.

I use Telegraf for most of the metrics.

It looks interesting as an app, but in context of self-hosting there are couple of speed bumps:

- The server side is quite complicated. (compared to Joplin for example) It’s multiple services and it also needs Mongo, Redis and S3. Makes sense for them to do it this way to be able to scale up, but for few users hosted locally it’s quite a lot of moving parts.

- You need to compile client apps to self-host. This effectively kills this as an option on iOS.

I was kind of the same, but I still collected metrics, because I just love graphs.

Over time I ended up setting alerts for failures I wish I was aware of earlier. Some examples:

- HDD monitoring - usually drive is showing signs of failure couple days before it fails, so I have time to shop around for replacement. If I had no alert set, I’d probably only notice when both sides of a mirror failed which would mean couple days of downtime, lot of work with backup restoration and very limited time to find drive for reasonable price

- networking issues - especially VPN, it’s much better to know that it is broken before you leave house

- some core services like DNS. With two Adguard instances it’s much better to be alerted when one is down, than to realize that you suddenly have no DNS when both fail and you can’t even google stuff without messing with your connection settings.

- SSD writes - same as HDDs, but in this case the alert fires at around 90% of the TBW lifetime declared by the manufacturer. I tend to replace them proactively, as they are usually used as a system disk without a mirror, which holds no valuable data, but whose failure would again lead to extended unplanned downtime

- CPU usage being maxed out for a long time - I had one service fail in a way where it consumed 100% of all cores. This had no impact on other services because the process scheduler did its job, but I ended up burning many kilowatt-hours of electricity as this continued unnoticed for weeks. This was before energy prices went up, but it was still noticeable power consumption. (I had a dual-CPU server back then, which consumed a lot of juice when maxed out)
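As an illustration of the SSD alert, the check itself is just a ratio against the rated endurance. A minimal sketch, where the function names, the 150 TBW rating and the byte counts are all made-up example values (how you read total bytes written from the drive is left out):

```python
def tbw_used_fraction(bytes_written: int, rated_tbw_terabytes: float) -> float:
    """Fraction of the manufacturer's rated write endurance already used."""
    rated_bytes = rated_tbw_terabytes * 1e12  # 1 TB = 10^12 bytes
    return bytes_written / rated_bytes


def should_alert(bytes_written: int, rated_tbw_terabytes: float,
                 threshold: float = 0.9) -> bool:
    """True once the drive has used at least `threshold` of its rated TBW."""
    return tbw_used_fraction(bytes_written, rated_tbw_terabytes) >= threshold


# Hypothetical drive rated for 150 TBW.
worn_drive_alert = should_alert(140 * 10**12, 150.0)   # roughly 93% used
fresh_drive_alert = should_alert(100 * 10**12, 150.0)  # roughly 67% used
```

In practice the same comparison would live in whatever alerting rule your monitoring stack uses; the point is only that the threshold is relative to the declared TBW, not an absolute number.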

That’s fair. Wonder why that is, because my experience is quite the opposite.

The metrics I shared above actually had the Pihole running on much more powerful HW. (proper server with quite beefy CPU) The Adguard stats are from an old Intel NUC which is performance-wise about on par with Rpi3B+. As you can see it barely uses any resources at all. So I’m surprised to see you reporting the performance as really bad.

I was testing Adguard on a small openwrt based device and it still ran fine. Rpi3B+ has an order of magnitude faster HW than that. I just don’t see how Adguard would be slower or even noticeably slow. Or even Pihole. Both could run about 40 copies of the service on a single Pi3.

Which is not to say I don’t trust you, it’s just strange.

You can’t really go wrong with any of those. They are both very solid options. Having said that, if I had to recommend one, I’d go with Adguard, because:

- The interface is better. Most notably the query log interface. Searching the logs with some long time span makes Pihole spike in memory usage and is super slow. (there’s no server-side pagination)

- Custom filters are more powerful thanks to modifiers, which AFAIK Pihole does not support. Some of it can be configured via dnsmasq (without user friendly interface), some I had not found any solution for. Good example is dnstype modifier, which I sometimes use to block AAAA responses for sites, that have set AAAA records, but the service actually does not work over IPv6. So I can disable IPv6 for certain domains if I need to. (or other way around, force IPv6 only)
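For reference, the AAAA case looks roughly like this as a custom filtering rule. This is written from memory of the AdGuard DNS filtering syntax, and `example.org` is just a placeholder domain, so double-check against the current docs:

```text
||example.org^$dnstype=AAAA
```

This should block AAAA queries for that domain and its subdomains, so clients fall back to IPv4. Using `$dnstype=A` instead would be the other way around.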

Some of the above might have changed, I haven’t used Pihole for about a year.

And also it is heavier

I can’t say I’m seeing the same.

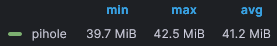

Pihole CPU and memory usage (these are 1 week stats):

Same for Adguard:

So both are kind of the same unless you run on very limited hardware. The docker images are about 100MB for Pihole and 20MB for Adguard. This is probably most important parameter as you can run Adguard on some routers, that have very limited flash storage, but again only matters on extremely limited HW, something like Raspberry Pi has orders of magnitude more resources.

First of all they use much more than the device IP to identify individual devices. IPv4 is no longer all that useful for identification with things like CGNAT being common.

But with IPv6 they’ll see my device IP, then they’ll see the same device with completely different IP, then again. Same for my kid’s device. But again, all of the above applies. It is a concern, but there are much better ways of tracking you anyways.

It kind of depends on what your priorities are. In my experience it’s usually much easier to upgrade to the latest version from the previous version, than to jump a couple of versions ahead because you didn’t have time to do upgrades recently…

When you think about it, from the development point of view, the upgrade from previous to last version is the most tested path. The developers of the service probably did exactly this upgrade themselves. Many users probably did the same and reported bugs. When you’re upgrading from version released many months ago to the current stable, you might be the only one with such combination of versions. The devs are also much more likely to consider all the changes that were introduced between the latest versions.

If you encounter issue upgrading, how many people will experience the same problem with your specific versions combination? How likely are you to see issue on GitHub compared to a bunch of people that are always upgrading to latest?

Also moving between latest versions, there’s only limited set of changes to consider if you encounter issues. If you jumped 30 versions ahead, you might end up spending quite some time figuring out which version introduced the breaking change.

Also no matter how carefully you look at it, there’s always a chance that the upgrade fails and you’ll have to rollback. So if you don’t mind a little downtime, you can just let the automation do the job and at worst you’ll do the rollback from backup.

It’s also pretty good litmus test. If service regularly breaks when upgrading to latest without any good reason, perhaps it isn’t mature enough yet.

We’re obviously talking about a home lab, where time is sometimes limited, but some downtime is usually not a problem.

First of all. Thank you for civil discussion. As you say this is weird place to have such discussion, but it’s also true that these jokes often have some kernel of truth to them that makes these discussions happen organically.

So with that out of the way and with no bad intentions on my side:

I’ve noticed you use Dockerfiles and Docker Images interchangeably. And this might be the core of misunderstanding here. What I was describing is that:

- Developer builds an image (using Dockerfile or otherwise) on their laptop and then pushes that image to a Docker repository.

- This exact same image is then used in CI to do integration tests, scanning, whatever…

- If all is good, this image is then deployed to production.

So if you compare the sha of the image in production and on the developer’s laptop, they are the same checksums. Files are identical. Nix arrives at this destination kind of from the other side. Arguably in a more elegant way, but in both cases the files are the same.
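The idea behind that checksum comparison can be sketched in a few lines. Note this is only an analogy: a real image digest is computed by the registry tooling over the image manifest, while the byte strings and the `content_digest` helper below are stand-ins I made up.

```python
import hashlib


def content_digest(data: bytes) -> str:
    """Content-addressed identifier: same bytes in, same digest out."""
    return "sha256:" + hashlib.sha256(data).hexdigest()


# Stand-ins for image contents; real images are of course much larger.
laptop_image = b"layer-1\nlayer-2\n"
production_image = b"layer-1\nlayer-2\n"           # the very same artifact, promoted
rebuilt_image = b"layer-1\nlayer-2 (rebuilt)\n"    # a rebuild that differs slightly

same_artifact = content_digest(laptop_image) == content_digest(production_image)
rebuild_matches = content_digest(laptop_image) == content_digest(rebuilt_image)
```

Promoting one built artifact through CI to production keeps the digest identical, whereas rebuilding from the Dockerfile at each stage only gives the same digest if the build happens to be bit-for-bit reproducible.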

This was the promise (or one possibility) in the early days of Docker. Obviously there are some problems with this approach. Like what if CPU architecture of the laptop differs from production server? Well that wasn’t a problem back in 2014, because ARM servers just didn’t exist. (Not in any meaningful way) There’s also this disconnection between the code that generates the image and the image itself, that goes to production. How do you trust environment (laptop) where image is built. Etc… So it just didn’t stick as a deployment pattern.

Many of these things Nix solves. But in terms of “it works on my laptop” what I wrote in the previous comment applies. The environment differences themselves, rather than slightly different build artefacts, are what’s frequently the problem. Nix is not going to solve the problem of slightly different databases because the developer is running MariaDB locally to test, but in production we use a DB managed by AWS. The developer is not going to catch this quirky behavior of how their app responds to a proxy, because they do not run AWS ELB on their laptop, but production is behind it. You get the idea.

When a developer says it works okay on their laptop, what it usually means is that they do not have a 100% copy of production locally (because obviously they don’t) and that as a result they didn’t encounter this specific failure mode.

Which is not to say that Nix is a bad idea. Nix is great. I’m just saying that there’s more to the “laptop problem” than just reproducible builds - we had those even before Docker Images.

Hope that makes sense. And again, thanks for civil discussion.

I only remember doing this with FireWire. Which model supported target disk mode over USB-A?