🇨🇦

- 3 Posts

- 217 Comments

Been using this for a while now. Not an extension, but a good bookmark to have.

Bit of a different solution:

If Paperless-NGX is one of the things you self-host; it has options to import emails based on your specified criteria, then you could have it delete each piece of mail it imports. You can also just have it move mail to folders on the mail server, or just tag/flag mail instead of deleting it. (for you to then manually delete at your leisure)

I use this to automatically import receipts, bills, work documents, and any other regular mail instead of dealing with it manually every week/month.

I’ve always used dd + sshfs to backup the entire sd card daily at midnight to an ssh server; retaining 2 weeks of backups.

Should the card die, I’ve just gotta write the last backup to a new card and pop it in. If that one’s not good, I’ve got 13 others I can try.

I’ve only had to use it once and that went smoothly. I’ve tested half a dozen backups though and no issues there either.

Last time I looked at the topic (several years ago in a now deleted reddit post); someone had posted info on the projector system.

The media is delivered on a battery backed up rack-mount pc with proprietary connectors and a dozen anti-tamper switches in the case. If it detects meddling; it wipes itself. You’re not likely to grab a copy from there.

As the other commenter mentioned; the projector and media are heavily protected with DRM, encrypting the stream all the way up to the projector itself. You can pull an audio feed off the sound board; but you’re stuck with a camera for video.

I haven’t actually tried myself; but I’ve read many DRMs can be defeated by simply running the service in a VM, then screen recording the VM from the host.

Try to directly screen capture Netflix for example, and the webpage will appear as a solid black box in the recording; but not if the capture is done from outside the VM.

Yeah… Becoming a public-facing file host for others to use seem rather irresponsible.

If/when a user’s given a means of uploading files to my server, there’s no method/permissions for them to share those files with others; it’s really just for them to send files to me. (Filebrowser is pretty good for that)

That and almost nothing is public access; auth or gtfo.

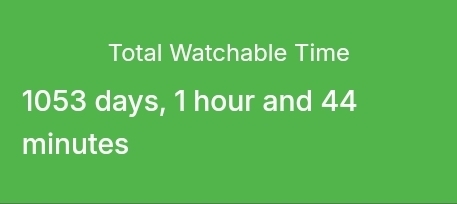

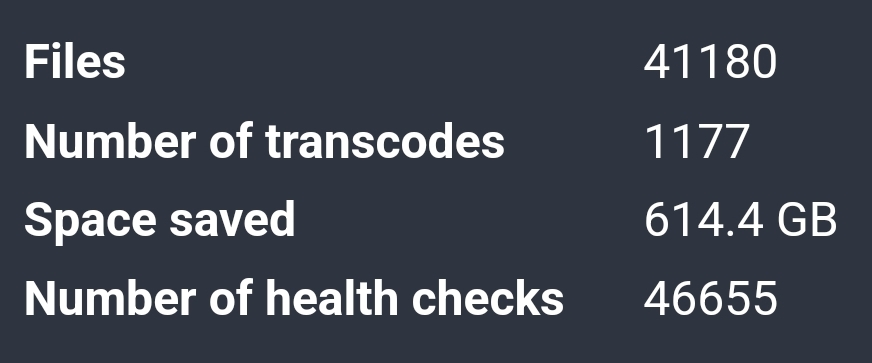

I used to use the built in convert options in Emby server, but recently switched to Tdarr to manage all my conversions. It’s got far more control/configurablity to encode your files exactly how you’d like.

It can also ‘health check’ files by transcoding them, but not saving the output; checking for errors during that process to ensure the file can actually be played through successfully. With 41k+ files to manage, that made it much easier to find and replace the dozen or so broken files I had, before I found them by trying to play them.

Fore warning; this is a long and intensive process. Converting my entire library to HEVC using an RTX 2080 took me over 2 months non-stop. (not including health checks)

4451 movies

398 series / 36130 episodes

Taking up 25.48tb after conversion to HEVC compressing it ~40%

Every series is monitored for new episodes which download automatically; and there’s a dozen or so public IMDB lists being monitored for new movies from studios/categories I like. Anything added to the lists gets downloaded automatically.

Then there’s Ombi gathering media requests from my friends/family to be passed to sonarr/radarr and downloaded.

At this point, the library continuously grows on its own, and I have to do little more than just tell it what I want to watch.

As far as I understand; it’s not the tools used that makes this illegal, but the realism/accuracy of the final product regardless of how it was produced.

If you were to have a high proficiency with manual Photoshop and produced similar quality fakes, you’d be committing the same crime(s)

creating child sex abuse images

and

offenses against their victims’ moral integrity

The thing is, AI tools are becoming more and more accessible to teens. Time, effort, and skill are no longer roadblocks to creating these images; which leaves very very little in an irresponsible teenagers way…

I tend to drop the link into yt1s.com

Sometimes just for audio, sometimes for the full vid.

I’m rarely grabbing more than one video at a time though.

[re-commenting as I meant this to be a top-level comment, not a reply]

A paid plex share is a plex server that someone is running + selling access too.

This is against plex’ terms, gets plex accounts banned; and in some cases, Plex (co) has taken rather drastic action by blocking entire VPS providers from reaching plex.tv; thus plex server software no longer functions on those VPS’s at all.

Naturally, people selling shares want to maximize profit, so they use VPS providers on the cheaper end; resulting in cheaper VPS solutions being blocked for everyone.

Drink less paranoia smoothie…

I’ve been self-hosting for almost a decade now; never bothered with any of the giants. Just a domain pointed at me, and an open port or two. Never had an issue.

Don’t expose anything you don’t share with others; monitor the things you do expose with tools like fail2ban. VPN into the LAN for access to everything else.

Sure, cloudflare provides other security benefits; but that’s not what OP was talking about. They just wanted/liked the plug+play aspect, which doesn’t need cloudflare.

Those ‘benefits’ are also really not necessary for the vast majority of self hosters. What are you hosting, from your home, that garners that kind of attention?

The only things I host from home are private services for myself or a very limited group; which, as far as ‘attacks’ goes, just gets the occasional script kiddy looking for exposed endpoints. Nothing that needs mitigation.

If they are injecting ads into the actual video stream; it won’t matter what client you use. You request the next video chunk for playback and get served a chunk filled with advertising video instead. The clients won’t be able to tell the difference unless they start analyzing the actual video frames. That’s an entirely server-side decision that clients can’t bypass.

Only if the ads are a fixed length and always in the same place for each playback of the same video.

Inserting ads of various lengths in varying places throughout the video will alter all the time stamps for every playback.

The 5th minute of the video might happen 5min after starting playback, or it could be 5min+a 2min ad break after starting. This could change from playback to playback; so basing ad/sponsor blocking on timestamps becomes entirely useless.

I have one more thought for you:

If downtime is your concern, you could always use a mixed approach. Run a daily backup system like I described, somewhat haphazard with everything still running. Then once a month at 4am or whatever, perform a more comprehensive backup, looping through each docker project and shutting them down before running the backup and bringing it all online again.

I setup borg around 4 months ago using option 1. I’ve messed around with it a bit, restoring a few backups, and haven’t run into any issues with corrupt/broken databases.

I just used the example script provided by borg, but modified it to include my docker data, and write info to a log file instead of the console.

Daily at midnight, a new backup of around 427gb of data is taken. At the moment that takes 2-15min to complete, depending on how much data has changed since yesterday; though the initial backup was closer to 45min. Then old backups are trimmed; Backups <24hr old are kept, along with 7 dailys, 3 weeklys, and 6 monthlys. Anything outside that scope gets deleted.

With the compression and de-duplication process borg does; the 15 backups I have so far (5.75tb of data) currently take up 255.74gb of space. 10/10 would recommend on that aspect alone.

/edit, one note: I’m not backing up Docker volumes directly, though you could just fine. Anything I want backed up lives in a regular folder that’s then bind mounted to a docker container. (including things like paperless-ngxs databases)

They weren’t storing your name in the first place; they’ve acquired a new service ‘blowfish’ for which an account is automatically created for you if you currently or in the past have used glassdoor. Blowfish demands a real name to be used at all. (including to delete your account)

Ontop of this, after linking the two services on your behalf; glassdoor will now automatically populate your real name and any other information they can gleam from blowfish, your resumes, and any other sources they can find, regardless of whether the information is correct (users have reported lots of incorrect changes). This is new.

English

English- •

- 6M

- •

English

English- •

- 7M

- •

English

English- •

- 1Y

- •

I found my works wifi blocks most ports outbound, but switching my my vpn to a more ‘standard’ port like 80, 443, 22, etc gets through just fine.

Now I’ve got a couple port forwarding rules I can switch on, as needed, that take one of those and route it to my vpn host.