Plutus, Haskell, Nix, PureScript, Swift/Kotlin. Laser-focused on FP: formality, purity, and totality; repulsed by pragmatic, unsafe, “move fast and break things” approaches.

AC24 1DE5 AE92 3B37 E584 02BA AAF9 795E 393B 4DA0


It’s still fairly challenging and the documentation is probably, at best, dogshit (if I may be so blunt) at the moment.

OCI is probably a more worthy goal anyway, IMO. And it is, unsurprisingly, much better supported.

I think some of these replies have missed the powerful idea that made me fall in love with Eelco Dolstra’s work. Here’s what won me over.

For example: THE main feature is that you can have a different version of, say, Python installed for each package on your system. Let’s say you had Brave working with one version of Python and another piece of software needed an older version. On an FHS-style system this would be challenging: you’d have to patch things by hand to make sure the dependencies didn’t step on each other, and every update would likely force you to redo those patches. So system administration and updates can really break things.

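In the Nix store, each package lives in its own directory and refers to its dependencies by their exact store paths, so different versions simply work alongside each other in their own unique, hash-based locations. Each directory is named after a hash (a truncated SHA-256) computed from everything that went into building that package, which includes its ENTIRE dependency graph, and that has powerful implications. (By default the hash covers the build inputs; fully content-addressed store paths are still an experimental feature.)

Because of this hash, packages are effectively hermetically sealed from each other and cannot step on each other. They find each other through the exact store paths baked in when they were built, and symlinks (such as the system profile behind `/run/current-system`) stitch the chosen versions together into the running system.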
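To make the Python example concrete, here is a minimal NixOS module sketch in which two small wrapper scripts each pin a different interpreter. The script names `legacy-tool` and `new-tool` are hypothetical, and it assumes your nixpkgs revision still ships both `python311` and `python312`.

```nix
{ pkgs, ... }:
let
  # Each wrapper interpolates the absolute /nix/store path of its own interpreter,
  # so the two Python versions never compete for a shared /usr/bin/python3.
  legacyTool = pkgs.writeShellScriptBin "legacy-tool" ''
    exec ${pkgs.python311}/bin/python3 "$@"
  '';
  newTool = pkgs.writeShellScriptBin "new-tool" ''
    exec ${pkgs.python312}/bin/python3 "$@"
  '';
in {
  environment.systemPackages = [ legacyTool newTool ];
}
```

After a rebuild, `legacy-tool --version` and `new-tool --version` should report different interpreters, each living in its own hash-named directory under `/nix/store`.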

This is the core idea of Nix, and taken far enough to define a whole OS, it is a SUPER powerful concept.

I’d actually argue the opposite with regard to clutter. If I switch to a new config without the software I no longer want, that software disappears entirely once I run a garbage collection, and there’s nothing left over the way there might be in `~/.config` on a non-immutable system.
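Garbage collection can itself be declared. A minimal sketch using the NixOS `nix.gc` options; the weekly schedule and 30-day window are arbitrary choices, not a recommendation:

```nix
{ ... }:
{
  # Periodically delete store paths that no GC root still references
  # (old system generations, packages removed from the config, etc.).
  nix.gc = {
    automatic = true;
    dates = "weekly";
    # Also prunes generations older than 30 days, so older rollback targets go away too.
    options = "--delete-older-than 30d";
  };
}
```

The one-off manual equivalent is `sudo nix-collect-garbage --delete-older-than 30d`.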

IMO, the real realization of Dolstra’s dream is flakes and home-manager. Together they boil your whole config down to a git repo where you can track changes and roll back the lock file if needed.
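A minimal `flake.nix` sketch of that setup, wiring home-manager into a NixOS system. The host name `myhost`, the user `mrskinner`, the 24.05 release branches, and the `./configuration.nix` / `./home.nix` file names are all assumptions for illustration:

```nix
{
  description = "Personal NixOS + home-manager config";

  inputs = {
    nixpkgs.url = "github:NixOS/nixpkgs/nixos-24.05";
    home-manager = {
      url = "github:nix-community/home-manager/release-24.05";
      # Reuse our nixpkgs pin instead of home-manager's own.
      inputs.nixpkgs.follows = "nixpkgs";
    };
  };

  outputs = { nixpkgs, home-manager, ... }: {
    nixosConfigurations.myhost = nixpkgs.lib.nixosSystem {
      system = "x86_64-linux";
      modules = [
        ./configuration.nix
        home-manager.nixosModules.home-manager
        {
          home-manager.useGlobalPkgs = true;
          home-manager.useUserPackages = true;
          home-manager.users.mrskinner = import ./home.nix;
        }
      ];
    };
  };
}
```

Applying it is `sudo nixos-rebuild switch --flake .#myhost`. Every input is pinned in `flake.lock`, so rolling back a bad update can be as simple as `git checkout HEAD~1 -- flake.lock` followed by another rebuild.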

I find it nice to open my config in an IDE, search it by string, and comment out whatever I don’t need. Laziness makes that convenient too: Nix only evaluates what the code actually reaches. If I comment out an import, that whole part of the config seamlessly switches off. It’s quite elegant.
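A small sketch of both effects in one module. The file names `./nfs-mounts.nix` and `./gaming.nix` and the binding `halfFinishedIdea` are hypothetical:

```nix
{ ... }:
let
  # Nothing below refers to this binding, so lazy evaluation never forces it
  # and the throw never fires.
  halfFinishedIdea = throw "never evaluated";
in {
  imports = [
    ./hardware-configuration.nix
    ./nfs-mounts.nix
    # ./gaming.nix  # commented out: everything this module declared drops out of the system
  ];
}
```

The caveat is that if another module sets options that only `./gaming.nix` defines, evaluation will fail until those settings are commented out too.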

I’m even more envious of the atomicity of Guix, but IMO it involves a little too much building the world from scratch for a newb like me.

I HIGHLY recommend forking a nix-config that uses flakes, home-manager, and whatever window manager you prefer. Since Nix is so versatile (and the documentation for flakes and home-manager is BAD), I found it absolutely crucial to reuse a well-architected config and slowly modify it in a VM until it was stable enough to try on a real machine.

Clearly, it’s not a skill issue with you. As for the dude who inspired this, my assessment was that he was flat out unwilling to learn and flat out unwilling to acknowledge that there are clearly some benefits to this style. You seem to already grasp it but don’t feel like committing the time, and I respect that much more than the blind dismissal that inspired my meme. ✌️

nd of interesting shit you can do in Nix, at one point I had zfs and ipfs entries in one of my configs. I got away from it all before flakes started to get popular.

I tried it as a docker host; the declarative formatting drove me around the bend. I get a fair bit of disaster proofing on my docker host with git and webhooks, besides us

I suspect the whole Docker thing will improve a lot now that Nix is on Docker’s radar. For now, at least, I’ve found the OCI implementation in Nix to be superior to the Docker one. I think the way Docker isolates things into layers is the biggest barrier to the two working together seamlessly at the moment, but I expect them to converge technologically over the coming 10 years, to the point where they might someday work together as a standard.
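Assuming “the OCI implementation” here means the NixOS `virtualisation.oci-containers` module, a declarative container looks roughly like this. The container name `whoami`, the image, and the port mapping are only illustrative:

```nix
{ ... }:
{
  # NixOS generates one systemd service per container entry
  # (for the podman backend, a unit named podman-whoami.service).
  virtualisation.oci-containers = {
    backend = "podman";
    containers.whoami = {
      image = "docker.io/traefik/whoami:latest";
      ports = [ "8080:80" ];
    };
  };
}
```

Because the container definition lives in the same git-tracked config as the rest of the host, rebuilding the machine recreates the container too.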

to try to replicate my current desktop in an immutable model, it would involve a lot of manual labour in scripting or checkpointing every time I installed or configured something, to save a few hours of labour in 2 years time when I get a new drive or do a full install.

If you have only one system, you might find the benefits not to be worth the bikeshedding effort.

However, I suspect that you’d be surprised with how easy it can be using home-manager. I have literally nothing that I need to do to a newly compiled NixOS system from my config because EVERYTHING is declared and provided inside of that config.
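As a sketch of what “everything declared” can look like on the home-manager side, here is a minimal `home.nix`. It assumes it is loaded through the home-manager NixOS module, which fills in `home.username` and `home.homeDirectory` (a standalone setup would need those too); `example-app` and its setting are hypothetical:

```nix
{ pkgs, ... }:
{
  home.stateVersion = "24.05";

  # User-level packages, installed without a single imperative command.
  home.packages = with pkgs; [ ripgrep fd ];

  # Shell config generated from the declaration instead of hand-edited dotfiles.
  programs.bash = {
    enable = true;
    shellAliases = { ll = "ls -l"; };
  };

  # Arbitrary files under ~/.config can be declared the same way.
  xdg.configFile."example-app/settings.conf".text = ''
    theme = dark
  '';
}
```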

If you don’t mind, can you give me an example of something in your config that you think is impossible or difficult to port to the Nix style? I’d be happy to attempt to Nixify it to prove my point. I’ve pretty much figured out how to do everything in the Nix way.

And I don’t mind if I end up being incredibly wrong on this point; I promise to be intellectually honest about it if I am. It just sounds like a fun exercise to me.

for a user that isn’t trying to maintain a dev environment, it’s a bloody lot of hassle

I agree, but I definitely prefer it to things like Ansible. I’m also happy to never have to run 400 apt install commands in a specific order on a new system, lest I have to start again from scratch.

Another place I swear by it is declaring drives. I used to use a bash script that updated fstab every time I booted (I mount an NFS volume from my LAN as if it were native to my machine) and then unmounted it on shutdown. With Nix, I haven’t had to invent workarounds for that quirk (or any other) since day one, because I simply declared it like so:

```nix
{
  config,
  lib,
  pkgs,
  inputs,
  ...
}: {
  fileSystems."/boot" = {
    device = "/dev/disk/by-uuid/bort";
    fsType = "vfat";
  };

  fileSystems."/" = {
    device = "/dev/disk/by-uuid/lisa";
    fsType = "ext4";
  };

  swapDevices = [
    {device = "/dev/disk/by-uuid/homer";}
  ];

  # NFS shares from the NAS: "noauto" keeps them out of the boot path,
  # and "x-systemd.automount" mounts each one on first access instead.
  fileSystems."/home/mrskinner/video" = {
    device = "192.168.8.130:/volume/video";
    options = ["x-systemd.automount" "noauto"];
    fsType = "nfs";
  };

  fileSystems."/home/mrskinner/Programming" = {
    device = "192.168.8.130:/volume/Programming";
    options = ["x-systemd.automount" "noauto"];
    fsType = "nfs";
  };

  fileSystems."/home/mrskinner/music" = {
    device = "192.168.8.130:/volume/music";
    options = ["x-systemd.automount" "noauto"];
    fsType = "nfs";
  };
}
```

IMO, where they really shine is in the context of declarative dev environments where the dependencies can be locked in place FOREVER if needed. I even use Nix to build OCI/Docker containers with their definitions declared right inside of my dev flake for situations where I have to work with people who hate the Nix way.
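A minimal sketch of a dev flake that exposes both a pinned dev shell and an OCI/Docker image built from the same derivation. `pkgs.hello` stands in for a real application, and the image name, the 24.05 pin, and `x86_64-linux` are assumptions:

```nix
{
  inputs.nixpkgs.url = "github:NixOS/nixpkgs/nixos-24.05";

  outputs = { nixpkgs, ... }:
    let
      system = "x86_64-linux";
      pkgs = nixpkgs.legacyPackages.${system};
      # Stand-in for your real application derivation.
      app = pkgs.hello;
    in {
      # `nix develop`: the same locked toolchain for everyone who uses Nix.
      devShells.${system}.default = pkgs.mkShell {
        packages = [ pkgs.git app ];
      };

      # `nix build .#dockerImage`: an image tarball for collaborators who don't.
      packages.${system}.dockerImage = pkgs.dockerTools.buildLayeredImage {
        name = "hello-app";
        tag = "latest";
        config.Cmd = [ "${app}/bin/hello" ];
      };
    };
}
```

The Nix-averse side of the team then only needs `docker load < result` and `docker run hello-app:latest`, while `flake.lock` keeps both outputs pinned to the same dependencies.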

I really didn’t declare myself the winner. IMO, I won’t have to: the software will do that once this way of working usurps container-style development as the de facto standard.

As an actual old man who was able to adapt, I simply pointed out that OP sounds like an old man, unable to acknowledge an obvious trend where immutable systems are clearly gaining popularity and are seen by many as the correct way to provision a mission-critical system.


RISC-V is an open instruction set, which should be what the Pi foundation (if their open source mission is to be taken at face value) would be switching to if they weren’t just a way for Broadcom to push their chips on the maker community under the guise of open source.

RISC-V, an open-standard instruction set architecture (ISA), has been making waves in the world of computer architecture. The name marks it as a Reduced Instruction Set Computing (RISC) design, and the “V” denotes the fifth generation of RISC architectures to come out of UC Berkeley. Unlike proprietary architectures such as ARM and x86, RISC-V is an open standard, so anyone can implement it without paying licensing fees. That openness has driven a surge of interest and adoption across many industries, making RISC-V a key player in the evolving landscape of computing.

At its core, an instruction set architecture defines the interface between software and hardware: it dictates which instructions a processor executes and how. RISC-V follows RISC principles, emphasizing simple, efficient instructions. That simplicity makes chips easier to design, reduces complexity, and makes it more straightforward to optimize how hardware and software interact. This contrasts with Complex Instruction Set Computing (CISC) architectures, whose more elaborate and versatile instructions often lead to more complex hardware designs.

The open nature of RISC-V is one of its most significant strengths. The ISA was originally stewarded by the RISC-V Foundation, a non-profit founded in 2015 that grew to more than 200 members from industry and academia; in 2020 that role passed to RISC-V International, which now owns, maintains, and publishes the specification.

They’ve been declining for years. It’s time the community ditched them for RISC-V machines.

Is docker even declarative?

Yes (though not as deterministic as Nix).

Also you can build docker images from nix derivations

Yes. I know.

I’d definitely be interested.

I did something like this a while back when I attempted to create an official Cardano dev/stake pool operator machine. I ended up realizing that a whole config is too personal to try to standardize, but parts of my shared configs DID help other Cardano devs and stake pool operators get a rock-solid Cardano dev/SPO setup that could be cloned onto a myriad of different machines and configs.

I suppose, if it comes to that, nothing is stopping devs like me from hard-forking Cardano’s open source code and launching that fork with a 100% public initial token allocation. I’ve honestly already been considering that option for a while now.

Hopefully you’re wrong and it never comes to that… but I’d be ready if it did, for sure.

Interesting. I like your idealism.

As a dev myself, it’s a pretty hard sell to get collaborators as it is. I can’t imagine I’d be able to find ANY if I were to roll my own cryptocurrency entirely, with fully realized tokenomics and a consensus algorithm on day one. It also sounds like your idea couldn’t possibly be implemented in a staking context.

Do you know of any projects that did it this way? (Bitcoin, IMO, doesn’t count, since the devs DID end up taking a huge stake in it for themselves.)

Do you count the core devs (who maintain/write the core protocol), the foundation (preferably a DAO or voting-based organization responsible for earmarking funding for upgrades and community projects), and the treasury (which distributes mining/staking rewards and collects fees for later distribution) as fair distribution? I do (depending on the structure of those organizations, obviously). Just wondering where you sit on that issue, and whether you can point to any projects that have the ideal token allocation you speak of.

I haven’t found a more fair ITA than Ergo but then again, the Ergo project seems to have trouble paying/attracting really solid core devs other than the founder, Kushti.

Edit: took out some mention of my favorite project. Want to be impartial here.

🚩🚩🚩🚩🚩🚩🚩🚩🚩🚩

As a crypto enthusiast and DApp dev, IMO this (and indeed most crypto projects especially ones that are really nothing more than ERC-20 or BRC-20 tokens) raises major red flags for me. IMO, it is sketchy moonboi shit.

If a hype-drenched crypto project is brand new and is already aggressively marketing itself with no discernible real world utility or reason for the disbursement of “governance tokens”, solely fixated on “number go up”, it is usually a scam/pump and dump.

Taking a look at the Initial Token Allocation for this project definitively allows me to make conclusions about the team’s honesty and trustworthiness.

So without further ado:

Check out the super sketchy token allocation (they break insiders up into different groups to try to hide the fact that most of the supply goes to insiders and private investors; compare that to Ergo and it seems incredibly greedy in contrast):

- 17%: StarkWare Investors
- 32.9%: Core Contributors (StarkWare and its employees and consultants, and Starknet software developer partners)
- 50.1%: granted by StarkWare to the Foundation, earmarked as follows:
  - 9%: Community Provisions, for those who performed work for Starknet and powered or developed its underlying technology, e.g. via past use of the StarkEx L2 systems. Crucially, all Community Provisions will be based on verifiable work that was performed in the past. For example, to the extent Community Provisions will be given to past StarkEx users, allocations will be determined based on verifiable usage of StarkEx’s technology that took place prior to June 1, 2022.
  - 9%: Community Rebates, rebates in Starknet Tokens to partially cover the costs of onboarding to Starknet from Ethereum. To prevent gamification, Community Rebates will only apply to transactions that occur after the rebate mechanism is announced.
  - 12%: Grants for research and work done to develop, test, deploy and maintain the Starknet protocol
  - 10%: a strategic reserve, to fund ecosystem activities that are aligned with the Foundation’s mission as explained in the previous post in this series.
  - 2%: Donations to highly regarded institutions and organizations, such as universities, NGOs, etc., as decided by Starknet Token holders and the Foundation.
  - 8.1%: Unallocated, the Foundation’s unallocated treasury, in place to further support the Starknet community in a manner to be decided by the community.

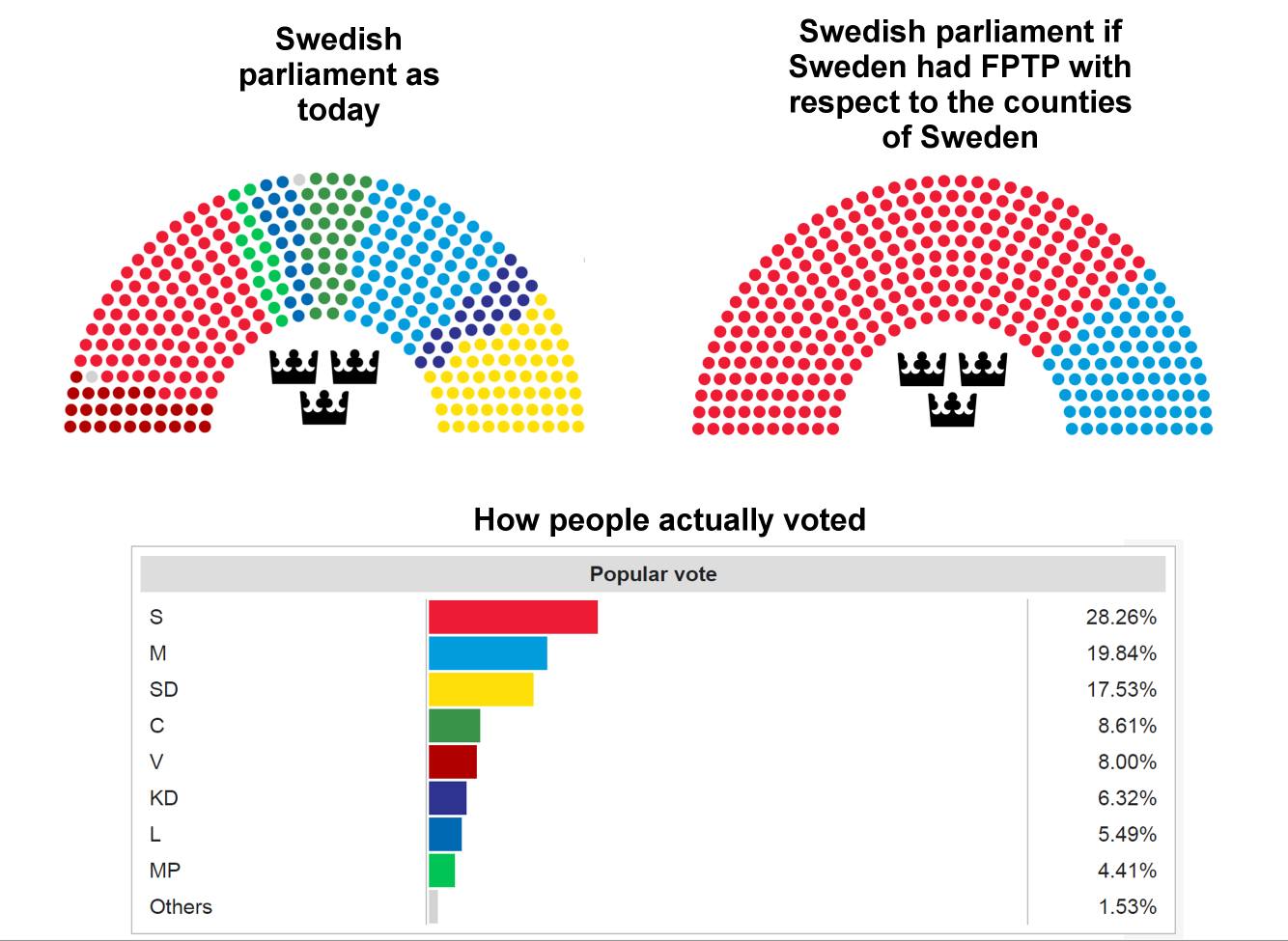

For one, FPTP doesn’t get enough credit for just how nefarious it is. And let me be clear: I am speaking of the presidential election specifically, though I’m sure this applies to many aspects of this “democracy” including state elections, etc that you mentioned.

Instead, the overarching establishment narrative likes to point the finger at the electoral college (which also quite heavily biases the power of votes toward voters in less populous areas and states).

Since the established oligarchs (who own the news outlets) tend to control information delivery in this country, how would you break through that wall? Would you engage in peaceful protest (or self-immolation)? Well, they’ve got a playbook for that too. They will discredit you and make you seem unhinged. For proof of how easy that is, look at the way they’re delegitimizing the brave, selfless active-duty Air Force member who engaged in peaceful protest by self-immolation outside the Israeli embassy against the Gaza war. Most news-watching voters probably already think he was crazy. It didn’t quite have the power that the same act had during, say, the Vietnam War.

Since you’re here, I’m guessing you’re a software engineer. Do me a favor: Model the real world dynamics of a US presidential election using domain driven design, making sure to accurately represent the two objectively unfair stages:

Rule #1: All candidates must pass stage 1 to be eligible for stage 2.

In stage 1, feel free to cheat, commit fraud, and engage in any strategy you need to prevent anyone but the owners of the party’s preferred candidates from winning. After all, your party is a private organization that can engage in whatever unfair tactic they deem necessary.

In stage 2, (if you’re paying attention, you ALREADY have irreparably biased the possible outcomes by cheating in stage 1) because of FPTP, you can now simply choose between only two of the MANY, MANY parties.

If you designed a system like that as a software engineer, your colleagues would be at your throat about how flawed that design was. However, here in the US, that’s just the design of our dEMoCrACy oF tHa gReATeSt cOuNTry iN tHa wErLD! 🥴

Edit: That “much worse” statement seems to come from a place of privilege. Don’t forget that. RIGHT NOW, more people are homeless and destitute than any other time in MY LIFE (I was born in the late 70’s). And most people literally have no say in whether or not their tax money is being used to genocide Muslims overseas to make room for a puppet government. Remember: You’re an anti-Semite if you oppose genocide.

Don’t blame the victims for a sham of a democracy. First-past-the-post (FPTP) is there to prevent anything outside of a two party system where primaries are filled with (fully allowed) election fraud and cheating.

“we could have voluntarily decided that, Look, we’re gonna go into back rooms like they used to and smoke cigars and pick the candidate that way. That’s not the way it was done. But they could have. And that would have also been their right.” - DNC Lawyer

…and that’s why it is wise to use monadic parsers at the input boundary.

Thanks for that explanation. That makes sense.

I guess I should also mention IHP.

As far as npm and PureScript go: I don’t interact with npm when I use it. I use Nix and nixpkgs to build it, which pulls packages from a predefined, fixed package set (PureScript’s curated package sets, documented on Pursuit, feel a whole lot like Stackage). I suppose if I wanted to use it in the real world, I’d probably have to expose myself to some npm. But from my cursory understanding and experience, it is probably less exposed to npm’s safety issues than straight-up JavaScript. Packages in the standard package set I use tend to be super high-quality code, like the modules you’d find in GHC.

AFAIK, Halogen for Purescript is about as FP as it gets in the front-end world. It’s pretty complicated but Halogen takes advantage of “free monads”. Perhaps you are looking for something even more rigorous since you mentioned Idris but I figured I’d mention that one.

I’m learning it now and it has not been easy. I tried to port a simple vanilla JavaScript dynamic draggable table over to Halogen and it has not been fun at all. I got 80% of the way there, then started having to reach for FFI, unsafeCoerce, or my own PureScript module to capture mouse events.

They simply want to make money for not creating any new content. They want subscribers not customers. They are currently in a new mode: rent seeking.

Pirate until they get their act together and offer something that has all content available, simply dividing up the proceeds fairly by percentage of viewing time.

Piracy is an availability problem. These assholes built 200 walled gardens and are wondering why people don’t go to theirs.