I’m Hunter Perrin. I’m a software engineer.

I wrote an email service: https://port87.com

I write free software: https://github.com/sciactive

- 7 Posts

- 199 Comments

You can buy a super cheap cloud VM, connect it to your own PC over a (self-hosted) VPN, and run a reverse proxy on the VM that forwards all incoming requests to your PC behind your school's network.

It's arguable whether this would violate their policy: you are technically hosting something, but it isn't accessible on the internet from their IP. So if you wanna be safe, don't do this. Otherwise, that could help you get started.
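The VM side of this is basically "accept a connection, open one to the PC over the VPN, and copy bytes both ways." Here's a minimal sketch using Python's asyncio, assuming the PC is reachable over the VPN at the hypothetical address `10.8.0.2:8080`. It's a plain TCP forwarder, not an HTTP-aware reverse proxy like Nginx, so it doesn't do TLS, virtual hosts, or header rewriting. It only shows the forwarding idea.

```python
import asyncio

# Hypothetical VPN address and port of the home PC (assumptions, not real values).
TARGET_HOST = "10.8.0.2"
TARGET_PORT = 8080


async def pipe(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """Copy bytes in one direction until EOF, then half-close the other side."""
    try:
        while data := await reader.read(65536):
            writer.write(data)
            await writer.drain()
        if writer.can_write_eof():
            writer.write_eof()
    except OSError:
        pass  # one side dropped the connection; handle_client closes both ends


async def handle_client(client_reader, client_writer,
                        target_host=TARGET_HOST, target_port=TARGET_PORT):
    """Forward one incoming connection to the PC and shuttle bytes both ways."""
    try:
        upstream_reader, upstream_writer = await asyncio.open_connection(
            target_host, target_port)
    except OSError:
        client_writer.close()  # the PC isn't reachable over the VPN
        return
    await asyncio.gather(
        pipe(client_reader, upstream_writer),
        pipe(upstream_reader, client_writer),
    )
    upstream_writer.close()
    client_writer.close()


async def main(listen_port: int = 80) -> None:
    """Listen on the VM's public interface and forward everything to the PC."""
    server = await asyncio.start_server(handle_client, "0.0.0.0", listen_port)
    async with server:
        await server.serve_forever()
```

To run it on the VM you'd call `asyncio.run(main())`. On Linux, binding port 80 usually needs root or `CAP_NET_BIND_SERVICE`.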

If it's just hours, that's fine. I've spent months on a system before that ultimately got scrapped. When I was at Google, they accidentally had two teams working on basically the same project. The other team, about 40 engineers who had worked on it for about a year, had their project scrapped. My team, with about 23 engineers, was meant to do the same work. So if you're ever wondering why Hangouts Chat launched kinda half-baked, that's why.

What I use for a lot of my sites is SvelteKit. It has a static site generator. If you like writing the HTML by hand, it's great. Also, HTML5 UP is where I get my templates. I made the https://nymph.io website this way, and https://sveltematerialui.com too.

If you want cheap encrypted storage you can run a Nephele server with encryption and something like Backblaze B2.

The way I've done it is Ubuntu Server with a separate Docker Compose stack for each service I run. Each service gets its own subdomain, and they all run through the Nginx Proxy Manager service, which forwards each one to the right port. The Portainer service lets me inspect things and poke around, but I don't manage anything through it. I want it all to be super portable, so if Ubuntu Server becomes too annoying, I can pack it all up and plop it into something like Fedora Server.
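Routing by subdomain works because the browser sends the hostname in the `Host` header, and the proxy picks a backend port from it. Here's a rough Python sketch of just that lookup, with made-up subdomains and ports. Nginx Proxy Manager sets this up through its web UI, not with code like this; it's only meant to show the idea.

```python
from typing import Dict, Optional

# Hypothetical routing table: subdomain -> local port the service's container listens on.
ROUTES: Dict[str, int] = {
    "photos.example.com": 8081,
    "git.example.com": 8082,
}


def route(host_header: str, routes: Dict[str, int] = ROUTES) -> Optional[int]:
    """Return the upstream port for a request's Host header, or None if unknown.

    Assumes plain hostnames (no IPv6 literals).
    """
    host = host_header.strip().lower()
    # Drop an explicit port, e.g. "photos.example.com:443" -> "photos.example.com".
    if ":" in host:
        host = host.rsplit(":", 1)[0]
    return routes.get(host)
```

Unknown hosts return `None`, which is where a real proxy would serve a 404 or a default page.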

I’m working on one called Soteria. It’s still early in development, but I’m focusing on both privacy and cloud availability.

It can upload footage to any WebDAV store, but it's designed to work best with my own WebDAV server, Nephele. This lets it upload footage to any S3-compatible blob storage, end-to-end encrypted.

That way if your cameras go offline, you can watch the last footage they were able to upload.

Like I said, it's in early development, so it's not ready to use yet, but I'm going to put more work into it soon and try to get it to a place where you can use it.

It works with any V4L2 compatible camera, so laptops, webcams, and Raspberry Pi cameras should all work.
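Uploading to WebDAV is ordinary HTTP: each file is a `PUT` to a URL. Here's a rough sketch of pushing one footage segment with Python's standard library. This isn't Soteria's code; the base URL, segment name, and Basic auth are assumptions for illustration. Uploading short segments as they're recorded, rather than one long file, would be one way to make sure the last footage a camera managed to upload is still there after it goes offline.

```python
import base64
import urllib.request


def put_segment(base_url: str, name: str, data: bytes, user: str, password: str) -> int:
    """Upload one footage segment with a WebDAV PUT and return the HTTP status.

    base_url, name, and the Basic auth credentials are hypothetical examples.
    """
    url = base_url.rstrip("/") + "/" + name
    req = urllib.request.Request(url, data=data, method="PUT")
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req.add_header("Authorization", f"Basic {token}")
    req.add_header("Content-Type", "application/octet-stream")
    # urlopen raises HTTPError for 4xx/5xx responses, so reaching here means success.
    with urllib.request.urlopen(req) as resp:
        return resp.status
```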

USB-A male to USB-A male is not in any USB standard (not entirely true, but compliant cables are very rare and don’t connect voltage), and if you plug it into a device it’s not meant for, the behavior is entirely unspecified. It will probably do nothing. But it might fry your USB controller that is not expecting to receive voltage.

USB-C to USB-C is in the spec, and if you plug in two host devices, they won’t hurt each other. You can actually charge a host device over USB-C, unlike USB-A.

That’s why it isn’t ok. It’s not the same thing, it’s not in the standard, and it can even be dangerous (to the device).

I don't think it's (just) that. It's also a different skill set to write documentation than code, and generally in these kinds of open source projects, the people who write the code end up writing the documentation. Even in some commercial projects, the engineers end up writing the docs, because the higher-ups don't see that they're different skill sets.

Sounds great to me. We software devs need to eat, so I totally get trying to turn this into a profitable business model. I'm very happy that they're not paywalling any features, but honestly, I'd be fine if they did. I'm probably going to pay either way. Immich has been awesome, and it's gotten me off my second-to-last Google app, Photos. If only there were a good alternative to YouTube…

Nothing is safe to run unless you write it yourself. You just have to trust the source. Sometimes that’s easy, like Red Hat, and sometimes that’s hard. Sometimes it bites you in the ass, and sometimes it doesn’t.

Docker is a good way to sandbox things, just be aware of the permissions and access you give a container. If you give it access to your network, that’s basically like letting the developer connect their computer to your wifi. It’s also not perfect, so again, you have to trust the source. Do some research, make sure they’re trustworthy.

Well I almost have a solution for you, but it’s not ready yet. I have a WebDAV server called Nephele, but I haven’t finished writing the CardDAV and CalDAV extensions for it. I should be done with it in a few months. (My priorities are on my commercial project right now, then back to open source stuff in a couple months.)

Because you have to manage it on your server and all your own machines, and it doesn’t provide any value if your server is hacked. It actually makes you less safe if your server is hacked, because then you can consider every machine that has that CA as compromised. There’s no reason to use HTTPS if you’re running your own CA. If you don’t trust your router, you shouldn’t trust anything you do on your network. Just use HTTP or use a port forward to localhost through ssh if you don’t trust your own network.

You don’t have to pay anyone to use HTTPS at home. Just use a free subdomain and HTTP validation for certbot.

Here’s an article on open source identity management solutions.

The easiest way to do it is the right way, with Let's Encrypt. The hardest way to do it is the wrong way: you create your own CA, import it as a root CA into every machine you'll access your servers from, then create and sign your own certs with that CA to use on your servers.

My setup is pretty safe. Every day it copies the root file system to its RAID, into folders named after the day of the week, so I always have 7 days of root fs backups. From there, I manually back up the RAID to a PC at my parents' house every few days. That backup is started from the remote PC, so if any sort of malware infects my server, it can't infect the backups.
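The rotation part is simple: the destination folder is the weekday's name, so each one gets overwritten a week later. Here's a sketch in Python with a hypothetical `backup_rootfs` helper. A real root fs copy needs to skip things like `/proc`, `/sys`, and the RAID mount itself (usually with a tool like rsync), so this only illustrates the 7-day naming scheme, not how my server actually copies files.

```python
import datetime
import shutil
from pathlib import Path
from typing import Optional

DAY_NAMES = ["Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday"]


def weekday_folder(backup_root: Path, day: datetime.date) -> Path:
    # date.weekday() is 0 for Monday; a fixed list avoids locale-dependent strftime names.
    return backup_root / DAY_NAMES[day.weekday()]


def backup_rootfs(source: Path, backup_root: Path,
                  day: Optional[datetime.date] = None) -> Path:
    """Copy source into <backup_root>/<Weekday>, replacing last week's copy."""
    day = day or datetime.date.today()
    dest = weekday_folder(backup_root, day)
    if dest.exists():
        shutil.rmtree(dest)  # drop the copy from 7 days ago
    shutil.copytree(source, dest, symlinks=True)  # keep symlinks as links
    return dest
```

Since there are only seven possible folder names, the backup directory never grows past seven copies.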

Yeah, that could work if I could switch to ZFS. I'm also using the built-in backup feature in Crafty to do backups, and it just makes zip files in a directory. I like it because I can run commands inside the Minecraft server before the backup to tell anyone who's on the server that a backup is happening, but I'm sure there's a way to do that from a shell script too. It's the need to bring in years' worth of old backups that makes my use case need something very specific, though.

In the future I’m planning on making this work with S3 as the blob storage rather than the file system, so that’s something else that would make this stand out compared to FS based deduplication strategies (but that’s not built yet, so I can’t say that’s a differentiating feature yet). My ultimate goal is to have all my Minecraft backups deduplicated and stored in something like Backblaze, so I’m not taking up any space on my home server.
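The core of deduplication is content addressing: split each backup into chunks, name each chunk by its hash, and only store a chunk if that hash isn't already there. Here's a minimal Python sketch, assuming fixed-size chunks and an in-memory dict standing in for the blob store (a directory today, or an S3 bucket later). This isn't the actual tool, just the general technique.

```python
import hashlib
from typing import Dict, List

CHUNK_SIZE = 1 << 20  # 1 MiB fixed-size chunks (an assumption, not a tuned value)


class BlobStore:
    """In-memory stand-in for a blob store such as a directory or an S3 bucket."""

    def __init__(self) -> None:
        self.blobs: Dict[str, bytes] = {}

    def put_if_absent(self, key: str, data: bytes) -> bool:
        """Store data under key unless it already exists; return True if newly stored."""
        if key in self.blobs:
            return False
        self.blobs[key] = data
        return True


def store_backup(data: bytes, store: BlobStore, chunk_size: int = CHUNK_SIZE) -> List[str]:
    """Split a backup into chunks, store each unique chunk once, return the manifest."""
    manifest = []
    for offset in range(0, len(data), chunk_size):
        chunk = data[offset:offset + chunk_size]
        key = hashlib.sha256(chunk).hexdigest()  # the chunk's content is its name
        store.put_if_absent(key, chunk)
        manifest.append(key)
    return manifest


def restore_backup(manifest: List[str], store: BlobStore) -> bytes:
    """Reassemble a backup by concatenating its chunks in manifest order."""
    return b"".join(store.blobs[key] for key in manifest)
```

Fixed-size chunks dedupe poorly when a change shifts every byte after it, which happens inside a zip when one entry changes size, so a real tool would likely use content-defined chunking or dedupe the files inside each zip instead.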

- @hperrin@lemmy.world to

English

English - •

- lemmy.world

- •

- 5M

- •

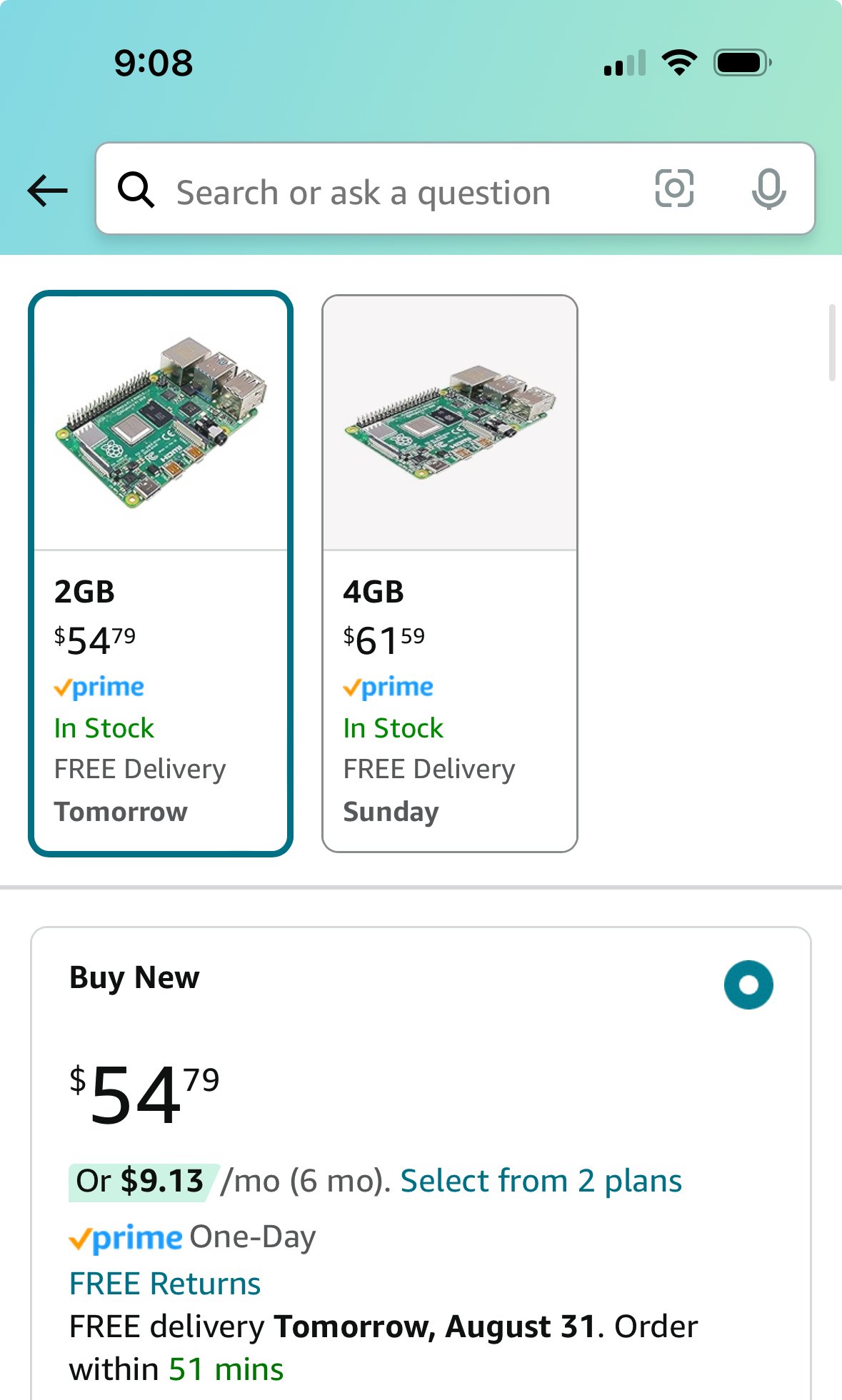

The cheapest one I know of is about $8 a month, so it should be affordable, even on a tight budget.