Yeah. I figured the day-of-the-month change should definitely happen at UTC midnight. I kind of like the idea that a day of the week lasts from before I wake up to after I go to sleep. (Or at least that there’s no changeover during business hours.)

But hell. If you wanted to run for president of the world on a platform of reforming date/time tracking but planned for the days of the week to change at midnight UTC, I’d still vote for you.

Note that the Sun’s position is not consistent throughout the year and varies widely based on your latitude.

Good call. The definitions of “noon” and “midnight” would need to be formalized a bit more, but given any line of longitude, the sun passes over that line of longitude “exactly” once every 24 hours. (I put “exactly” in quotes because even that isn’t quite exactly true, but we account for that kind of thing with leap seconds.) So you could base noon on something like “when the sun is directly over a point on that line of longitude (and then round to the nearest hour).”

Could still be a little weird near the poles, but I think that definition would still be sensible. If you’re way up north, for instance, and you’re in the summer period when the sun never sets, you still just figure out your longitude and figure out when the sun passes over some point on that line of longitude.
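Rounding “the sun passes over my line of longitude” to an hour only needs the longitude, since the sun appears to move 15° of longitude per hour (360° / 24 h). Here’s a minimal Go sketch under that assumption; `NoonUTCHour` is a made-up name. It computes *mean* solar noon and ignores the equation of time, which shifts the real moment by up to about a quarter of an hour over the year.

```go
package main

import (
	"fmt"
	"math"
)

// NoonUTCHour returns the UTC hour (0-23) nearest to mean solar noon at
// the given longitude in degrees (east positive, west negative).
// Assumption: the equation of time is ignored, so this is mean noon,
// not the exact moment the sun crosses the meridian.
func NoonUTCHour(longitude float64) int {
	h := 12 - longitude/15         // 15 degrees of longitude per hour
	r := int(math.Round(h))        // round to the nearest whole hour
	return ((r % 24) + 24) % 24    // wrap into 0..23
}

func main() {
	for _, lon := range []float64{0, -75, -122.4, 139.7} {
		fmt.Printf("longitude %7.1f: noon ~ %02d:00 UTC\n", lon, NoonUTCHour(lon))
	}
}
```

Longitude -75 (roughly the US East Coast) comes out at 17:00 UTC, which matches the “sun is directly overhead at 1700 hours” example further down.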

Though in practice, I’d suspect the area right around the poles would pretty much just need to decide on something and go with it so they don’t end up having to do calculations to figure out whether it’s “afternoon” or “morning” every time they move a few feet. Heh. (Not that a lot of folks spend a lot of time that close to the poles.) Maybe they’d just decide arbitrarily that the current day of the week and period of the day are whatever they currently are in Greenwich. Or maybe even abandon the use of day of the week and period of the day altogether.

Just the days of the week? You mean that 2024-06-30 23:59 and 2024-07-01 00:01 can be the same weekday and, at the same time, different days? Would the definition of “day” be different depending on whether you’re talking about “day of the week” or “universal day”?

Yup.

I’m just thinking about things like scheduling dentist appointments at my local dentist. I’d think it would be less confusing for ordinary local interactions like that if we could say “next Wednesday at 20:00” rather than having to keep track of the fact that depending what period of the day it is (relative to landmarks like “dinner time” or “midmorning”) it may be a different day of the week.

And it’s not like there aren’t awkward mismatches between days of the week and days of the month now. Months don’t always start on the first day of the week, for instance. (Hell. We don’t even agree on what the first day of the week is.) “Weeks” are an artifact of lunar calendars. (And, to be fair, so are months.)

(And while we’re on the topic of months, we should have 13 of 'em: 12 of 30 days each and one at the end with 5 days, or 6 in leap years. And they should be called “first month”, “second month”, “third month”, etc. None of this “for weird historical reasons, October is the 10th month, even though the prefix ‘oct’ would seem to indicate it should be the 8th” bs. Lol.)

No, see, how it would work without timezones is:

- Everyone would use UTC and a 24-hour clock rather than AM/PM.

- If that means you eat breakfast at 1400 hours, go to bed around 0400 hours, and the sun is directly overhead at 1700 hours (or something more random like 1737), fine. (Better than fine, actually!)

- Every area keeps track of what time of day daily events (like meals, when school starts or lets out, etc.) happen. Though I think rounding to the nearest whole hour, or in some cases the nearest half hour, generally makes the most sense. (And it’s not like everyone in the same area keeps the same schedule as it is now.)

- You still call the period before when the sun is directly overhead “morning” and the period after “afternoon” and similarly with “evening”, “night”, “dawn”, “noon”, “midnight” etc.

- One caveat is that with this approach, the day-of-the-month change (when we switch from the 29th of the month to the 30th, for instance) happens at different local times of day for different people (in the above example, it would happen in the evening, about seven hours after the sun is overhead). Oh well. People will get used to it. But I think it still makes the most sense to decide that the days of the week (“Monday”, “Tuesday”, etc.) last from whatever time “midnight” is locally to the following midnight, again probably rounded to the nearest whole hour. (Now, you might be thinking, “Yeah, but that’s just timezones again.” But consider how those “timezones” would work: you’d figure out the day of the week by taking your longitude and rounding. That’s much simpler than having to keep a whole-ass database of all the data about all the different timezones. And it would only come into play when deciding when the day of the week changes over.)

- Though, one more caveat. If you do that, then there has to be a longitudinal line where it’s always a different day of the week on one side than it is just on the other side. But that’s already the case today, so not really a drawback relative to what we have today.

The creator of DST gets the first slap. Then the timezones asshole.

I’m planning to do a presentation at work sometime in the next few weeks on how to deal with dates, times, timezones, conversions, etc. I figure it would be a good topic to cover. I’m going to start my talk by saying “first, imagine there is no such thing as timezones or DST.” And then build on that.

I’ve seen someone code that way. Not since high school, but that’s a way that some people think coding works when they start out writing code.

This person was trying to write a game in (trigger warning: nostalgia) QBasic and had it drawing a sort of Pac-Man-like character. In pseudocode, what he was doing was basically:

```
// Draw character with mouth open at (100, 100)
moveCursorTo(100, 100)
drawLineFromCursorAndMoveCursor(116, 100)
drawLineFromCursorAndMoveCursor(108, 108)
drawLineFromCursorAndMoveCursor(116, 116)
drawLineFromCursorAndMoveCursor(100, 116)
drawLineFromCursorAndMoveCursor(100, 100)

// Wait for half a second.
sleepSeconds(0.5)

// Draw character with mouth closed at (101, 100)
moveCursorTo(101, 100)
drawLineFromCursorAndMoveCursor(109, 100)
drawLineFromCursorAndMoveCursor(117, 108)
drawLineFromCursorAndMoveCursor(109, 116)
drawLineFromCursorAndMoveCursor(101, 116)
drawLineFromCursorAndMoveCursor(101, 100)

// Wait for half a second.
sleepSeconds(0.5)

// Draw character with mouth open at (102, 100)
moveCursorTo(102, 100)
drawLineFromCursorAndMoveCursor(118, 100)
drawLineFromCursorAndMoveCursor(110, 108)
drawLineFromCursorAndMoveCursor(118, 116)
drawLineFromCursorAndMoveCursor(102, 116)
drawLineFromCursorAndMoveCursor(102, 100)

// Wait for half a second.
sleepSeconds(0.5)

...
```

He hadn’t gotten to the point of working in user controls. (Like “change direction to ‘up’ when user presses the ‘up’ key” or whatever.) And understandably had no idea how that would work if/when he got that far.
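The usual way out of copy-pasting every frame is to keep the character’s state (position, direction, mouth open or closed) in variables and compute each frame from that state; user input then just changes the direction. A minimal Go sketch of the idea with drawing and input stubbed out. `Pacman`, `Outline`, `Step`, and `SetDirection` are hypothetical names, and the vertex offsets are the ones from the pseudocode above.

```go
package main

import "fmt"

// Point is a screen coordinate (Y grows downward, as in QBasic).
type Point struct{ X, Y int }

// Pacman holds everything needed to draw the next frame.
type Pacman struct {
	Pos       Point
	DX, DY    int // movement per frame
	MouthOpen bool
}

// Outline returns the polygon for the current state, using the same
// 16x16 shapes as the pseudocode (always facing right).
func (p Pacman) Outline() []Point {
	x, y := p.Pos.X, p.Pos.Y
	if p.MouthOpen {
		return []Point{{x, y}, {x + 16, y}, {x + 8, y + 8}, {x + 16, y + 16}, {x, y + 16}, {x, y}}
	}
	return []Point{{x, y}, {x + 8, y}, {x + 16, y + 8}, {x + 8, y + 16}, {x, y + 16}, {x, y}}
}

// Step advances one frame: move, then toggle the mouth.
func (p *Pacman) Step() {
	p.Pos.X += p.DX
	p.Pos.Y += p.DY
	p.MouthOpen = !p.MouthOpen
}

// SetDirection is what a key handler would call, e.g. (0, -1) for "up".
func (p *Pacman) SetDirection(dx, dy int) { p.DX, p.DY = dx, dy }

func main() {
	p := Pacman{Pos: Point{100, 100}, DX: 1, MouthOpen: true}
	for frame := 0; frame < 4; frame++ {
		if frame == 2 {
			p.SetDirection(0, -1) // stand-in for "user pressed Up"
		}
		fmt.Println(p.Outline())
		// A real game would draw the outline here and sleep ~0.5s.
		p.Step()
	}
}
```

The first three frames reproduce the three hand-written frames; everything after that, including the change of direction, falls out of the same loop. Turning the mouth to face the direction of travel would be one more function of the same state.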

I was kind of the chief architect for a project where I worked. I decided on (and got my team on board with) making it an SPA. Open-in-new-tab worked perfectly.

(One really nice thing about it was that we just made the backend a RESTful API that would be usable by both the JS front-end and any automated processes that needed to communicate with it. We developed a two-pronged permissions system that supported human-using-browser-logs-in-on-login-page-and-gets-cookie-with-session-id authentication and shared-secret-hashing-strategy authentication. We had role-based permissions on all the endpoints. And most of the API endpoints were used by both the JS front-end and other clients. Pretty nice.)

I quit that job and went somewhere else. And then 5 years later I reapplied and came back to basically the exact same position in charge of the same application. And when I came back, open-in-new-tab was broken. A couple of years later, it’s not fixed yet, but Imma start pushing harder for getting it fixed.

Regular audio CDs don’t have any DRM. (Unless it’s a data CD filled with audio files that have DRM or some such. But regular standard audio CDs that work in any CD player, there’s no DRM. The standard just doesn’t allow for any DRM.) And so the DMCA’s anticircumvention provisions wouldn’t apply to CDs.

But as for the Sony case you’re referencing, I’m not familiar with it, so I’ll have to do more research on that.

Of those three steps, step 2 is the illegal one. (Assuming we’re talking about music and not software.) Even if you never do step 3.

(Not saying things should be that way. Nor that it’s not difficult to enforce. Only that as the laws are today, even ripping a music CD to your hard drive without any intention to share the audio files or resell the CD, even if you never listen to the tracks from your computer, the act of making that “copy” infringes copyright.)

Edit: Oh, and I should mention this is the case for U.S. copyright. No idea about any other countries.

Go. It’ll be just different enough from what you have experience with to make you think about things differently (in a good way!) from now on. And it’s also a fantastically well-designed language that’s great for getting real work done. And it’s lightning fast as languages go, and compiles to an actual executable. Really a pleasure to work with. It’s my (no pun intended) go-to language for every new project I start. (Excluding what I write specifically for a paycheck. I don’t have a choice there.)

A lot of technologies started out as pirate technologies.

Cable TV? The first people who started shoving TV over cables into people’s homes didn’t ask for permission. But now that’s such a normal thing that we can’t imagine it having been infringement at one point.

Player piano rolls too. No permission was sought, and their legality wasn’t settled until the roll makers got sued. (The Supreme Court actually sided with the roll makers in 1908, but Congress responded with a compulsory license in the Copyright Act of 1909: composers or rights holders got a royalty set by statute at two cents per roll, but they couldn’t deny any player piano roll maker the right to make player piano rolls of their songs.)

But then things shifted and now the courts are owned by Disney.

Lol! Yes! I’d never made the connection, but yes, “AntiMS” was a name I originally chose to mean “anti-Microsoft.” And I used GitHub until the day I heard that Microsoft was buying GitHub. (Actually, my GitHub is still out there. I just force-pushed to overwrite all the history of all my GitHub repos with a single readme file that says “I moved to Gitlab.”)

I started using that username back before I realized all corporations were evil. I thought it was just Microsoft back then. Simpler times.

Usually the one(s) I’m working on right at the moment.

But if I were to look back and decide which ones I still remember enjoying, a few come to mind.

For my day job, I’ve written two different (more or less) ORMs in my career. (For two different companies and in two different languages.) Pretty interesting stuff, that. The kind of project that you can sink your teeth into.

Neither one was a “I think I’ll write an ORM” kind of situation. They were more like “oh, given these requirements, I think probably I’ll need something that will work like X, Y, Z… oh, that’s an ORM. Ok then, let’s do this.”

Side-projects-wise, most of the side projects I’d talk about are here. (Yes, AntiMS is me, even though it’s a different username.) “hydrogen_proxy” got way less fun when I got stuck for like a year on how to make HTTPS work, but aside from that it was a lot of fun to work on. “codecomic” is quite interesting to work on as well.

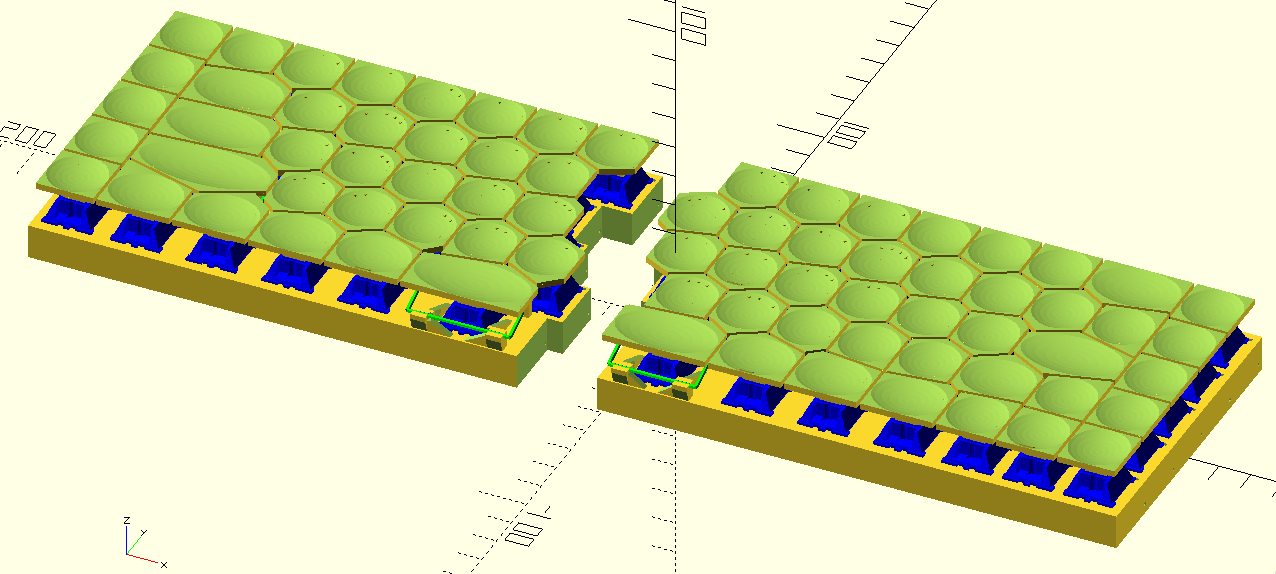

And the project I’ve been working on most recently is a very configurable framework, written in a combination of Go and OpenSCAD, for creating bespoke, 3D-printable mechanical keyboards, including keycaps.

Here’s a preview of roughly where I am with that mechanical keyboard project at the moment:

I’d rather see no commit message than an AI-generated one.

Also, if I’m not misinterpreting OP, it sounded from the post I was responding to like OP gave the LLM a summary along with the code. If OP’s writing a summary anyway, why not just proofread that and use it as the commit message rather than involving an LLM in the middle of the process?

Even in a hypothetical where the company hired human tech writers to write commit messages for developers, I’d rather the commit message contain what the developer had to say than the tech writer’s possible misinterpretation of it.

Precision > concision && accuracy > concision. Just use your own wording as the commit message. I’d rather see an account of a code change from the viewpoint of the change’s author than a shorter reformulation, even if that reformulation did come from a human who knew the problem space and wasn’t prone to making shit up on the fly.

If you were on my team and I knew you were doing this, then aside from all the other issues everybody else has mentioned in this thread, I’d go out of my way not just to check every single one of your git commits (both code changes and commit messages) for inaccuracies but also to find every possible reason to nitpick everything you committed.

You shouldn’t be using LLMs to write your git commits (messages or code changes). They hallucinate. But if you are going to use them, you need to spend much more time proofreading what they output than you’d spend writing it yourself. Check every single word for errors. (And, honestly, make the text fit the way your team’s other commits read, assuming you’re on a team.)

In short, if you’re going to use LLMs, DO NOT TRUST ANYTHING THEY GIVE YOU. And don’t be surprised if you get negative blowback from others for using them at all. Keep in mind what can happen if you trust LLMs.

SEO’s a dark art.

In general, the more other pages (and the more highly-ranked pages) link to a page, the higher that page will show up in Google. But that’s also quite an oversimplification. (I don’t know that the rules Google uses for deciding how to rank pages in search results are even public info.)
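The “links from highly-ranked pages count for more” idea is the core of the original PageRank algorithm. Google’s actual ranking uses far more signals than this and isn’t public, so treat the following as a textbook illustration only: a minimal Go power-iteration sketch with the commonly cited damping factor of 0.85. The link graph is made up.

```go
package main

import "fmt"

// PageRank scores pages by power iteration. links[i] lists the pages
// that page i links to; damping is the chance a surfer follows a link
// rather than jumping to a random page.
func PageRank(links [][]int, damping float64, iters int) []float64 {
	n := len(links)
	rank := make([]float64, n)
	for i := range rank {
		rank[i] = 1 / float64(n)
	}
	for it := 0; it < iters; it++ {
		next := make([]float64, n)
		for i := range next {
			next[i] = (1 - damping) / float64(n) // random-jump share
		}
		for i, outs := range links {
			if len(outs) == 0 {
				// Dangling page: spread its rank evenly over all pages.
				for j := range next {
					next[j] += damping * rank[i] / float64(n)
				}
				continue
			}
			// A page splits its own rank among the pages it links to,
			// so a link from a high-ranked page is worth more.
			share := damping * rank[i] / float64(len(outs))
			for _, j := range outs {
				next[j] += share
			}
		}
		rank = next
	}
	return rank
}

func main() {
	// Pages 0, 1 and 3 link to page 2; page 2 links back to page 0.
	links := [][]int{{2}, {2}, {0}, {2}}
	for i, v := range PageRank(links, 0.85, 50) {
		fmt.Printf("page %d: %.3f\n", i, v)
	}
}
```

Page 2, which three pages link to, ends up on top, and page 0 ranks well almost entirely because page 2 links to it, while pages 1 and 3 (no inbound links) only get the random-jump share.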

I don’t think there’s much any one person (even a site’s owner) can do to make a site show up higher in Google. The owner can make smart decisions about how to ensure the site gets users coming back and posting links on social media and such, but ultimately the way a page or site gets to show up in more Google searches is by being popular.

My advice: don’t focus on Google or SEO or anything. Focus on fostering a community that’s worth coming back to and worth linking to.

Reminds me of A Story About Magic.

An attitude I’ve seen a lot among software developers is that basically there aren’t “good languages” and “bad languages.” That all languages are equal and all criticisms of particular languages and all opinions that some particular language is “bad” are invalid.

I couldn’t disagree more.

The syntax, tooling, standard library, third-party libraries, documentation quality, language maintainers’ policies, etc. are of course factors that can be considered when evaluating how “good” a language is. But definitely one of the biggest factors that should be considered is how assholeish the community around a particular language is.

A decade or two ago, Ruby developers had a reputation for being smug and assholeish. I can’t say I knew a statistically significant number of Ruby developers, but the ones I did know definitely embodied that stereotype. I’ve heard recently that the Rust community has similar issues.

The Rust language has some interesting features that have made me want to look deeper, but what I’ve heard about the community around Rust has so far kept me away.

I write Java for a paycheck, but for my side projects, Go is my (no pun intended) go-to language. I’ve heard nothing but good things about its community. I think I’ll stick with it for a while.

Do consider that Microsoft is not your friend and may pull evil legal and pricing tricks that fuck you over down the road. For instance, something like what Unity tried to pull recently.

Hell will freeze over before GCC or MinGW tries to pull something like that. (And even if they tried, someone could fork GCC or MinGW to ensure a free version remains available.)

I wrote my own VTT. Does that count?

Darn. I went into that article hoping to read a hilarious story about some coder who insisted on using only his self-written, buggy bubble sort implementation rather than the sort methods in the standard library, or who nobody could get to quit deleting necessary features from the codebase.

Good story even so.

We have to go deeper.