Every once in a while, security researchers discover sophisticated exploits that allow malware to take over your computer via multimedia files, but those are rarely exploited in the wild by run-of-the-mill malware.

Unless you’re an important person being targeted by hackers and three-letter agencies, your biggest threat is running infected programs from untrusted sources, e.g. cracks downloaded from random torrents or warez sites, shady sites serving ads that trick you into running executables, etc.

Do they strip off HTTPS somehow?

Well yes. How else could they provide services such as page caching, image optimization, email address obfuscation, JS minification, DDoS mitigation, etc. unless they can see all data flowing between your server and your visitors in the clear?

Cloudflare is basically an MITM proxy. This blog post might be helpful if you want to know how an MITM proxy works in general: https://vinodpattanshetti49.medium.com/how-the-mitm-proxy-works-8a329cc53fb

Remember when Google was beloved by everyone, back when they still had the “don’t be evil” motto? Cloudflare right now is like Google back then: super useful, and it provides a lot of free services that would be expensive with other providers. But unlike Google, if Cloudflare goes full evil in the future, the impact will be much larger because they’re an MITM proxy capable of seeing the unencrypted traffic of every website under their wing. Right now they’re serving ~30% of the top 10,000 websites and growing.

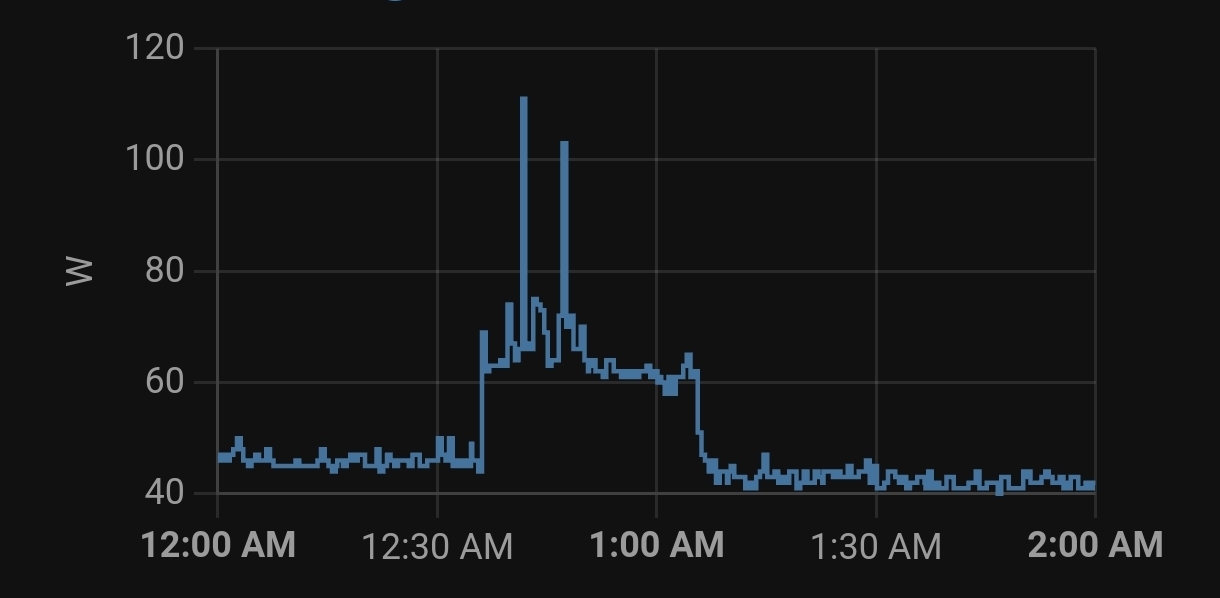

The Xeon E5-2670 has a 115W TDP, which means 2x115=230W for the two processors alone. With 8 RAM modules @ ~3W each, it’s going to guzzle ~250W under load, while screaming like a jet engine. Assuming $0.12/kWh and running 24/7, that’s $262.8 per year for electricity alone.

It would be great if you have an isolated server room to contain the noise, plus cheap electricity, but a more modern workstation should use at most 1/4 of that electricity, or even less.
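
To make the arithmetic behind that yearly figure explicit, here is a minimal sketch. It assumes the machine draws a constant ~250W around the clock, which is a simplification; the function name and the quarter-power comparison are only illustrative.

```python
def annual_electricity_cost(watts: float, price_per_kwh: float,
                            hours_per_day: float = 24.0) -> float:
    """Yearly cost of a device drawing `watts` continuously."""
    kwh_per_year = watts / 1000 * hours_per_day * 365
    return kwh_per_year * price_per_kwh


# Dual E5-2670 box: roughly 250 W under load at $0.12/kWh.
old_server = annual_electricity_cost(250, 0.12)
# Hypothetical modern workstation drawing a quarter of that.
modern_box = annual_electricity_cost(250 / 4, 0.12)

print(f"old server: ${old_server:.2f} per year")   # $262.80 per year
print(f"modern box: ${modern_box:.2f} per year")
```

A real server idles well below its peak draw, so treat the result as an upper bound for a machine that is busy all the time.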

Google Reader was the best. Not sure why Google killed it, but it was really good at both content discovery and keeping up with sites you’re interested in. I tried several alternatives but nothing came close, so I gave up and hung out more on forums / link aggregators like slashdot, hacker news, reddit and now lemmy for content discovery. I’m also interested to hear what others use.

If this server is running Linux, you can use autossh to forward ports through another server. In this example, they only use it to forward the SSH port, but it can be used to forward any port you want: https://www.jeffgeerling.com/blog/2022/ssh-and-http-raspberry-pi-behind-cg-nat

By “remotely accessible”, do you mean remotely accessible to everyone or just you? If it’s just you, then you don’t need to set up a reverse proxy. You can use your router as a VPN gateway (assuming you have a static IP address), or you can use Tailscale or ZeroTier.

If you want to make your services remotely accessible to everyone without using a VPN, then you’ll need to expose them to the world somehow. How to do that depends on whether you have a static IP address or are behind CGNAT. If you have a static IP, you can route ports 80 and 443 to your load balancer (e.g. nginx proxy manager), which works best if you have your own domain name so you can map each service to its own subdomain in the load balancer. If you’re behind CGNAT, you’re going to need an external server/VPS that routes traffic arriving on its ports 80 and 443 into your home network, essentially granting you a static IP address.
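
The "one subdomain per service" idea boils down to a lookup on the Host header of each incoming request. Here is a rough sketch of that lookup only, not of how nginx proxy manager is actually configured; the domain names, internal addresses and function name are all made up for illustration.

```python
from typing import Optional

# Hypothetical mapping from public subdomain to internal service address.
ROUTES = {
    "cloud.example.com": "http://192.168.1.10:8080",
    "media.example.com": "http://192.168.1.11:8096",
}


def pick_backend(host_header: str) -> Optional[str]:
    """Return the internal address for a request's Host header, if any."""
    # Drop an optional ":port" suffix and normalise case before the lookup.
    host = host_header.split(":")[0].lower()
    return ROUTES.get(host)


print(pick_backend("cloud.example.com"))
print(pick_backend("unknown.example.com"))
```

In practice the proxy does this for you; you only fill in the equivalent of the table above.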

I’ve never heard of consumer apps doing this. I’m not familiar with foundry, but it seems their target audience is companies? Cracking down hard on companies that use unlicensed copies is very common in the B2B world. Microsoft, Oracle, etc. all do this to companies, threatening to “audit” them when they detect unlicensed use from the company’s IP address.

Pirated apps are one of the top sources botnet operators use to infect new machines and add them to their network. Try not to run any pirated app or game if you can, but if you can’t avoid it, get it from trusted sources (e.g. directly from the cracker’s homepage), not from random sources like TPB where anyone can upload anything.

I think you can send a SIGUSR1 signal to the mumble process to tell it to reload the SSL certificate without actually restarting it. You can use docker kill --signal="SIGUSR1" <container name or id>, but then you still need to give your user access to the docker group. Maybe you can set up a monthly cron job under the root user to run that command every month?

Note that rsync.net includes 7 days of free daily snapshots. Also, the main advantage over Backblaze B2 for me is that you can just sync a whole folder full of small files instead of compressing them into an archive prior to uploading to a B2 bucket. This means you can access individual files later without needing to download the whole archive.
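
If you would rather have cron call a small script than a raw command, a sketch could look like this. The container name "mumble" and the function names are assumptions, and it only wraps the docker kill command from above; I haven’t verified that the signal actually triggers a certificate reload in your mumble version.

```python
import subprocess
from typing import List


def build_reload_command(container: str) -> List[str]:
    """Build the docker command that sends SIGUSR1 to the container."""
    return ["docker", "kill", "--signal=SIGUSR1", container]


def reload_certificate(container: str) -> None:
    """Send the signal; raises CalledProcessError if docker reports failure."""
    subprocess.run(build_reload_command(container), check=True)


# Example call, with "mumble" as a hypothetical container name:
# reload_certificate("mumble")
```

Running it from root’s crontab once a month avoids adding your own user to the docker group.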

I still use b2 to store long term backup archives though.

I’m currently using nextcloud:26-apache from here because some nextcloud apps I use are not compatible with v27 and v28 yet. The apache version is actually less hassle to use because nextcloud can generate the .htaccess configuration dynamically by itself, unlike the php-fpm version where you have to maintain your own nginx configuration. The php-fpm version is supposedly faster and scales better, but chances are you won’t see that benefit unless your server handles a large amount of traffic.

People usually come here looking for advice on how to replace their dockerized nextcloud setup with a bare-metal setup. Now you came along presenting a solution to do the reverse! Bravo!

What do you guys think about putting the different components (webserver, php, redis, etc.) in separate containers like this, as compared to all in one?

I actually have a similar setup, but with the nextcloud apache container instead of php-fpm, and in rke2 instead of docker compose.

There are self-hosted runtimes such as workerd that allow you to run your own stateless lambda-like platform. That approach is kinda losing steam though, and everyone seems to be pushing self-hosted kubernetes as the best way to get off the cloud these days.

There are now many solutions for deploying kubernetes on a fleet of bare-metal servers, so if you use kubernetes, the option to take everything in house again is available. Distributed storage is the toughest part to set up in house, but there are many mature solutions that integrate well with kubernetes.

Thank you for the correction!